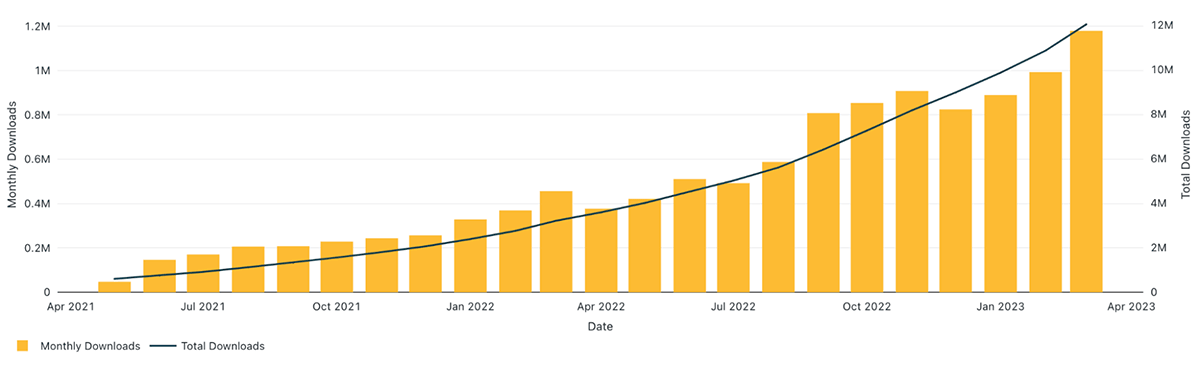

The Databricks Terraform provider has passed 10 million installations, a significant increase in adoption given that it became generally available less than a year ago.

This milestone shows that many customers use Terraform and the Databricks provider to automate infrastructure deployment and management of their Lakehouse Platform.

To easily maintain, manage, and scale their infrastructure, DevOps teams build it from modular, reusable components called Terraform modules. Terraform modules let you reuse the same components across multiple use cases and environments. They also help enforce a standardized approach to defining resources and adopting best practices across your organization. Beyond ensuring that best practices are followed, this consistency helps enforce compliant deployments and avoid accidental misconfigurations, which can lead to costly errors.
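As a minimal illustration of that reuse, assume a hypothetical local module at `./modules/databricks-workspace` that accepts `environment` and `location` inputs (both names are made up for this sketch). A single module block with `for_each` then stamps out one identically configured copy per environment:

```hcl
# Hypothetical local module: the path and input names are illustrative only.
module "workspace" {
  source   = "./modules/databricks-workspace"
  for_each = toset(["dev", "staging", "prod"])

  # Each instance gets the same definition; only the environment differs.
  environment = each.key
  location    = "westeurope"
}
```

Because every environment is built from the same module, a fix or best-practice change made once in the module propagates to all of them on the next apply.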

Introducing Terraform Databricks modules

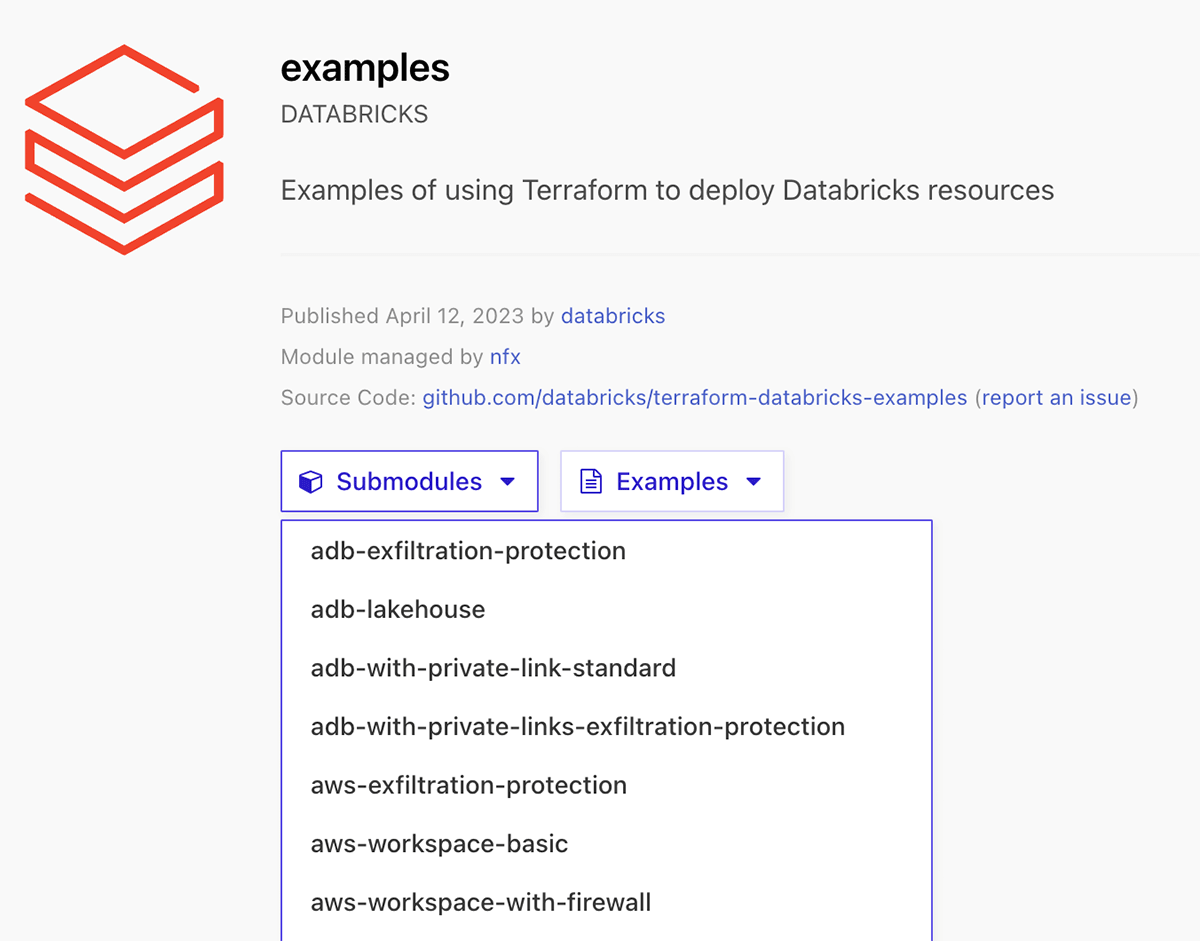

To help customers test and deploy their Lakehouse environments, we're releasing the experimental Terraform Registry modules for Databricks, a set of more than 30 reusable Terraform modules and examples for provisioning your Databricks Lakehouse platform on Azure, AWS, and GCP using the Databricks Terraform provider. It uses the content in the terraform-databricks-examples GitHub repository.

There are two ways to use these modules:

- Use the examples as a reference for your own Terraform code.

- Directly reference the submodule in your Terraform configuration.
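For the second approach, a module block can point at a submodule through Terraform's `//` subdirectory syntax for module sources. The sketch below assumes the registry address `databricks/examples/databricks` and a submodule named `adb-lakehouse`; check the registry page for the actual submodule names and the inputs each one requires.

```hcl
module "lakehouse" {
  # Registry address and submodule name are assumptions; the `//` separates
  # the registry module from the subdirectory that contains the submodule.
  source = "databricks/examples/databricks//modules/adb-lakehouse"

  # In real use, pin a released version with the `version` argument.
  # Supply the submodule's required inputs here, as listed in its README.
}

# A Git source for the same repository would also work (path assumed):
# source = "github.com/databricks/terraform-databricks-examples//modules/adb-lakehouse"
```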

The full set of available modules and examples can be found in Terraform Registry modules for Databricks.

Getting started with Terraform Databricks examples

To use one of the available Terraform Databricks modules, follow these steps:

- Reference the module using one of the supported module source types.

- Add a `variables.tf` file with the required inputs for the module.

- Add a `terraform.tfvars` file and provide values for each defined variable.

- Add an `output.tf` file with the module outputs.

- (Strongly recommended) Configure your remote backend.

- Run `terraform init` to initialize Terraform and download the required providers.

- Run `terraform validate` to validate the configuration files in your directory.

- Run `terraform plan` to preview the resources that Terraform plans to create.

- Run `terraform apply` to create the resources.
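The file-based steps above might look like the following for a module that needs a region and a set of tags. Everything here is an assumption for illustration: the variable names, the hypothetical `module.workspace` and its `workspace_url` output, and the Azure Storage backend placeholders. The `terraform.tfvars` content is shown as comments because it belongs in its own file.

```hcl
# variables.tf: declare the inputs the module needs (names are hypothetical).
variable "location" {
  type        = string
  description = "Azure region for the deployment."
}

variable "tags" {
  type    = map(string)
  default = {}
}

# terraform.tfvars (separate file): assign a value to each variable.
# location = "westeurope"
# tags     = { owner = "data-platform" }

# output.tf: re-export a module output (module and output names are hypothetical).
output "workspace_url" {
  value = module.workspace.workspace_url
}

# Remote backend: keep state in Azure Storage (all names are placeholders).
terraform {
  backend "azurerm" {
    resource_group_name  = "tfstate-rg"
    storage_account_name = "tfstatestorage"
    container_name       = "tfstate"
    key                  = "databricks.terraform.tfstate"
  }
}
```

A remote backend keeps the state file out of local machines, so a team can share it and Terraform can lock it during `terraform apply`.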

Example walkthrough

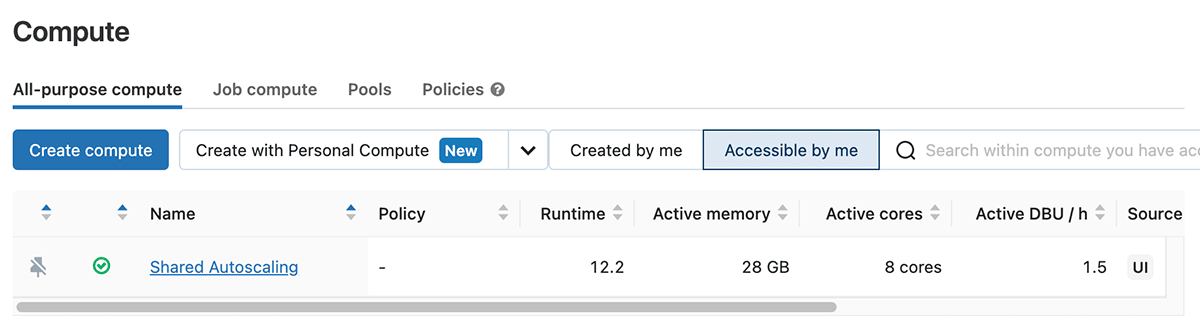

In this section we demonstrate how to use the examples provided on the Databricks Terraform Registry modules page. Each example is independent of the others and has a dedicated README.md. We now use the Azure Databricks example adb-vnet-injection to deploy a VNet-injected Databricks workspace with an autoscaling cluster.
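The autoscaling part of such a deployment can be sketched with the Databricks provider's `databricks_cluster` resource. This is not the adb-vnet-injection example's own code: the cluster name, worker counts, and autotermination value are illustrative assumptions, and the two data sources simply pick a long-term-support runtime and the smallest node type with local disk.

```hcl
# Latest long-term-support Databricks Runtime version.
data "databricks_spark_version" "lts" {
  long_term_support = true
}

# Smallest available node type that has a local disk.
data "databricks_node_type" "smallest" {
  local_disk = true
}

resource "databricks_cluster" "autoscaling" {
  cluster_name            = "autoscaling-example" # hypothetical name
  spark_version           = data.databricks_spark_version.lts.id
  node_type_id            = data.databricks_node_type.smallest.id
  autotermination_minutes = 20 # illustrative value

  # Databricks scales the worker count between these bounds based on load.
  autoscale {
    min_workers = 1
    max_workers = 4
  }
}
```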

Step 1: Authenticate to the providers.

Open providers.tf to check which providers the example uses; here we need to configure authentication for the Azure and Databricks providers. Refer to the Azure and Databricks provider documentation for detailed information on how to configure authentication.
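A minimal `providers.tf` in that spirit might look like the sketch below. It assumes the workspace is declared elsewhere in the configuration as `azurerm_databricks_workspace.this` (a hypothetical resource name) and that credentials come from an `az login` session, which both providers can pick up from the Azure CLI when no other credentials are configured.

```hcl
terraform {
  required_providers {
    azurerm = {
      source = "hashicorp/azurerm"
    }
    databricks = {
      source = "databricks/databricks"
    }
  }
}

# With no explicit credentials, azurerm uses the Azure CLI session from `az login`.
provider "azurerm" {
  features {}
}

# Point the Databricks provider at the workspace (resource name is hypothetical);
# it can authenticate with the same Azure CLI identity.
provider "databricks" {
  host = azurerm_databricks_workspace.this.workspace_url
}
```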

Step 2: Read through the example's README and prepare input values.

The identity you use with `az login` to deploy this template should have the Contributor role on your Azure subscription, or the minimum permissions required to deploy the resources in this template.

Then follow these steps:

- Run `terraform init` to initialize Terraform and download the required providers.

- Run `terraform plan` to see which resources will be deployed.

- Run `terraform apply` to deploy the resources to your Azure environment.

Since we used the `az login` method to authenticate to the providers, you will be prompted to log in to Azure through the browser. Enter `yes` when prompted to deploy the resources.

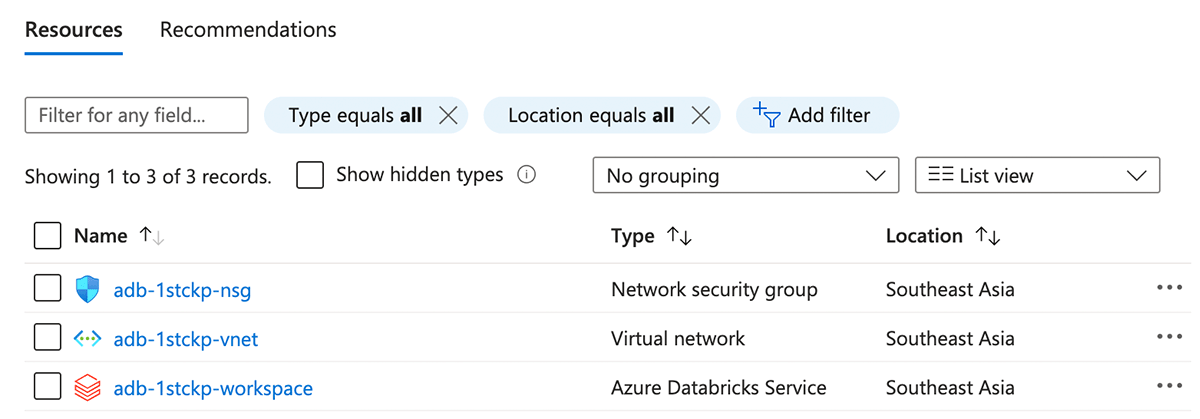

Step 3: Verify that the resources were deployed successfully in your environment.

Go to the Azure Portal and verify that all resources were deployed successfully. You should now have a VNet-injected workspace with one cluster deployed.

You can find more examples, along with their requirements and provider authentication details, in this repository under /examples.

How to contribute

The terraform-databricks-examples content will be continuously updated with new modules covering different architectures and more features of the Databricks platform.

Note that this is a community project, developed by Databricks Field Engineering and provided as-is. Databricks does not offer official support for it. To add new examples or modules, you can contribute to the project by opening a pull request. For any problem, please open an issue in the terraform-databricks-examples repository.

Check out the current list of available modules and examples, and try them to deploy your Databricks infrastructure!