Every morning Susan walks straight into a storm of messages and doesn't know where to begin! Susan is a customer success specialist at a global retailer. Her primary objective is to make sure customers are happy and receive personalised service whenever they run into issues.

Overnight the company receives many reviews and pieces of feedback across multiple channels, including websites, apps, social media posts, and email. Susan starts her day by logging into each of these systems and picking up the messages her colleagues have not yet collected. Next, she has to make sense of these messages, identify which ones need a reply, and draft a response for the customer. This isn't easy, because the messages often arrive in different formats and every customer expresses their opinions in their own unique style.

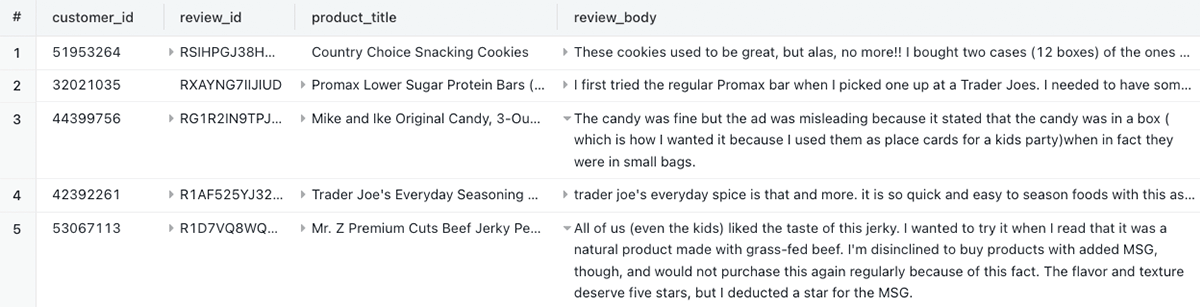

Here's a sample of what she has to deal with (for the purposes of this post, we're using extracts from Amazon's customer review dataset):

Susan worries because she knows she isn't always interpreting, categorising, and responding to these messages consistently. Her biggest fear is that she might accidentally fail to respond to a customer because she misread their message. Susan isn't alone. Many of her colleagues feel the same way, as do most customer service representatives out there!

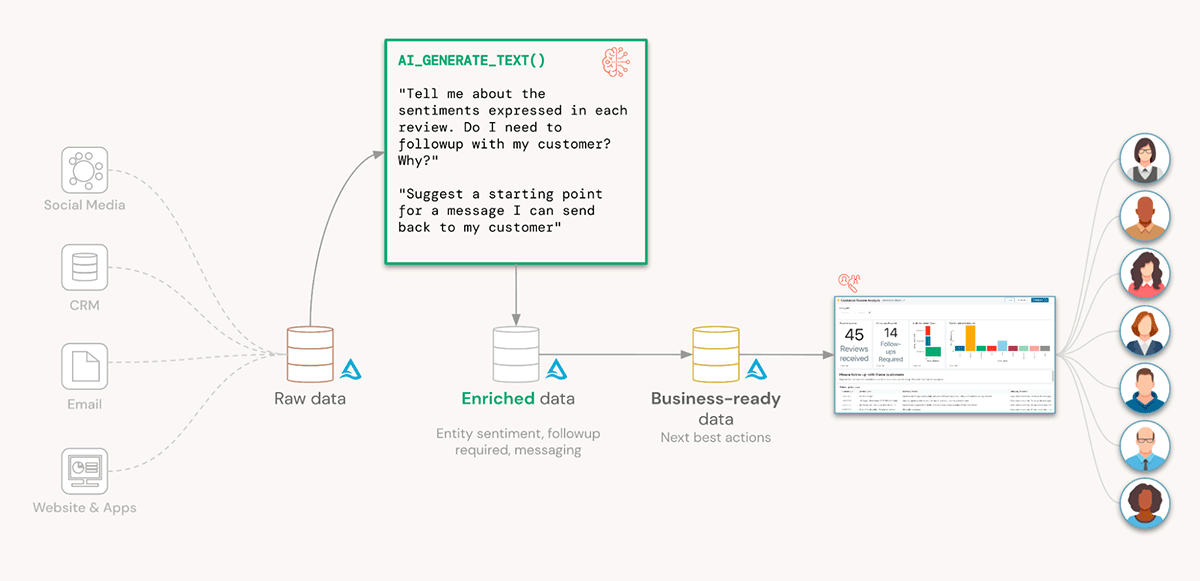

The challenge for retailers is how to aggregate, analyse, and act on this freeform feedback in a timely manner. A good first step is using the Lakehouse to collect all these messages from all these systems into one place. But then what?

Enter LLMs

Large language models (LLMs) are perfect for this scenario. As their name implies, they are highly capable of making sense of complex, unstructured text. They are also adept at summarising the key topics discussed, determining sentiment, and even generating responses. However, not every organisation has the resources or expertise to develop and maintain its own LLMs.

Fortunately, we can now consume LLMs as a service, such as Azure OpenAI's GPT models. The question then becomes: how do we apply these models to our data in the Lakehouse?

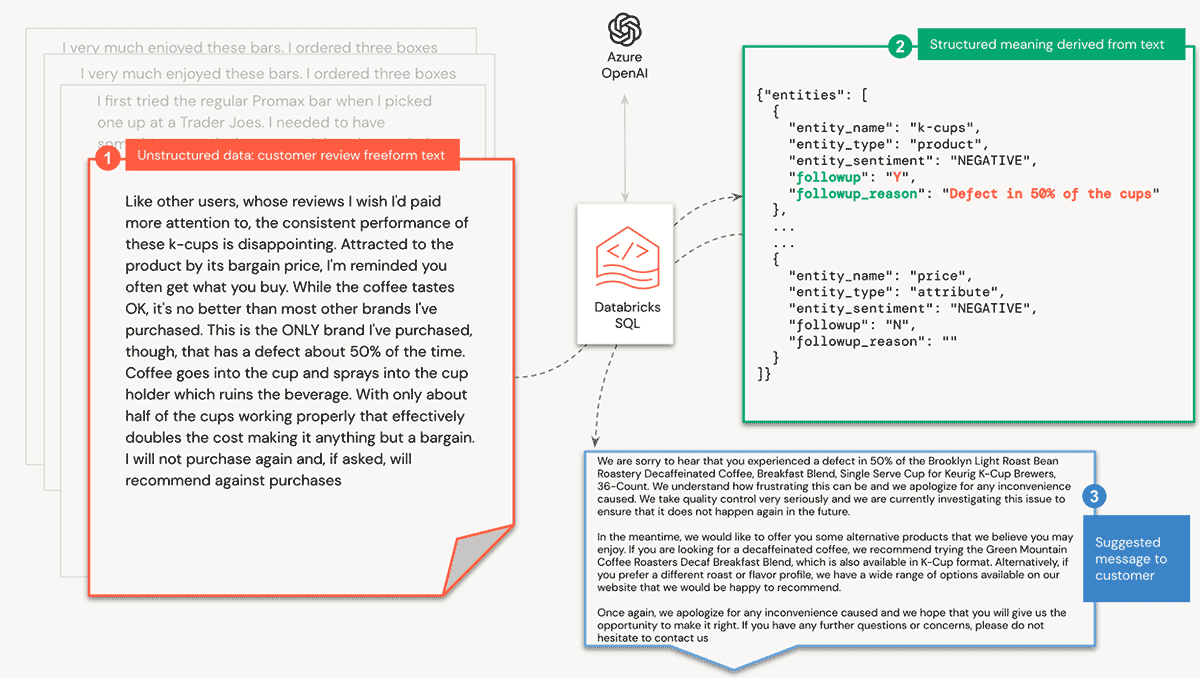

In this walkthrough, we'll show you how to apply Azure OpenAI's GPT models to unstructured data residing in your Databricks Lakehouse and end up with well-structured, queryable data. We will take customer reviews and identify the topics discussed and their sentiment. We will then determine whether the feedback requires a response from our customer success team. We'll even pre-generate a message for them!

The problems Susan's company needs to solve include:

- Using a readily available LLM that also has enterprise support and governance

- Deriving consistent meaning from freeform feedback

- Determining whether a next action is required

- Most importantly, allowing analysts to interact with the LLM using familiar SQL skills

Walkthrough: Databricks SQL AI Functions

AI Functions simplifies the complex task of deriving meaning from unstructured data. In this walkthrough, we'll use a deployment of an Azure OpenAI model to apply conversational logic to freeform customer reviews.

Pre-requisites

We need the following to get started:

- Sign up for the SQL AI Functions public preview

- An Azure OpenAI key

- Store the key in Databricks Secrets (documentation: AWS, Azure, GCP)

- A Databricks SQL Pro or Serverless warehouse

Prompt Design

To get the best out of a generative model, we need a well-formed prompt (i.e. the question we ask the model) that produces a meaningful answer. We also need the response in a format that can easily be loaded into a Delta table. Fortunately, we can tell the model to return its analysis as a JSON object.
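To show what JSON output buys us before any model is involved, here is a minimal sketch. It uses Spark SQL's `from_json` to parse a hand-written JSON string, shaped like the model response shown later in this post, into a typed STRUCT. The literal is an illustrative stand-in, not real model output.

```sql
-- Parse a hand-written, model-style JSON string into a typed STRUCT.
-- No LLM call is made here: the literal below is an illustrative stand-in.
SELECT parsed.entities[0].entity_name      AS first_entity,
       parsed.entities[0].entity_sentiment AS first_sentiment,
       size(parsed.entities)               AS entity_count
FROM (
  SELECT from_json(
    '{"entities": [{"entity_name": "k-cups", "entity_type": "product", "entity_sentiment": "NEGATIVE", "followup": "Y", "followup_reason": "Defect in 50% of the cups"}]}',
    'STRUCT<entities: ARRAY<STRUCT<entity_name: STRING, entity_type: STRING, entity_sentiment: STRING, followup: STRING, followup_reason: STRING>>>'
  ) AS parsed
) AS t;
```

This should return a single row: `k-cups`, `NEGATIVE` and `1`. In its default permissive mode, `from_json` typically turns malformed input into NULLs rather than a query error. If the model ever returns text that is not valid JSON, it is therefore worth checking downstream for NULL annotations.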

Here is the prompt we use to determine entity sentiment and whether the review requires a follow-up:

```
A customer left a review. We follow up with anyone who appears unhappy.
Extract all entities mentioned. For each entity:
- classify sentiment as ["POSITIVE","NEUTRAL","NEGATIVE"]
- whether customer requires a follow-up: Y or N
- reason for requiring followup

Return JSON ONLY. No other text outside the JSON. JSON format:
{
    entities: [{
        "entity_name": <entity name>,
        "entity_type": <entity type>,
        "entity_sentiment": <entity sentiment>,
        "followup": <Y or N for follow up>,
        "followup_reason": <reason for followup>
    }]
}

Review:
Like other users, whose reviews I wish I'd paid more attention to, the
inconsistent performance of these k-cups is disappointing. Attracted to the
product by its bargain price, I'm reminded you often get what you pay for. While
the coffee tastes OK, it's no better than most other brands I've purchased.
This is the ONLY brand I've purchased, though, that has a defect about 50%
of the time. Coffee goes into the cup and sprays into the cup holder which
ruins the drink. With only about half of the cups working properly that
effectively doubles the cost making it anything but a bargain. I will not
purchase again and, if asked, will advise against purchases.
```

Running this prompt on its own gives us a response like:

```json
{
    "entities": [
        {
            "entity_name": "k-cups",
            "entity_type": "product",
            "entity_sentiment": "NEGATIVE",
            "followup": "Y",
            "followup_reason": "Defect in 50% of the cups"
        },
        {
            "entity_name": "coffee",
            "entity_type": "product",
            "entity_sentiment": "NEUTRAL",
            "followup": "N",
            "followup_reason": ""
        },
        {
            "entity_name": "price",
            "entity_type": "attribute",
            "entity_sentiment": "NEGATIVE",
            "followup": "N",
            "followup_reason": ""
        }
    ]
}
```

Similarly, to generate a response back to the customer, we use a prompt like:

```
A customer of ours was unhappy about <product name> specifically
about <entity> due to <reason>. Provide an empathetic message I can
send to my customer including the offer to have a call with the relevant
product manager to leave feedback. I want to win back their favour and
I do not want the customer to churn
```

AI Functions

We'll use Databricks SQL AI Functions as our interface to Azure OpenAI. Using SQL gives us three key benefits:

- Convenience: we avoid writing custom code to interface with Azure OpenAI's APIs

- End-users: analysts can use these functions in their SQL queries when working with Databricks SQL and their BI tools of choice

- Notebook developers: can use these functions in SQL cells and spark.sql() commands

We first create a function to handle our prompts. We have stored the Azure OpenAI API key in a Databricks Secret and reference it with the SECRET() function. We also pass the Azure OpenAI resource name (resourceName) and the model's deployment name (deploymentName). We can also set the model's temperature, which controls the level of randomness and creativity in the generated output. We explicitly set the temperature to 0 to minimise randomness and maximise repeatability.

```sql
-- Wrapper function to handle all our calls to Azure OpenAI
-- Analysts who want to use arbitrary prompts can use this handler
CREATE OR REPLACE FUNCTION PROMPT_HANDLER(prompt STRING)
RETURNS STRING
RETURN AI_GENERATE_TEXT(prompt,
  "azure_openai/gpt-35-turbo",
  "apiKey", SECRET("tokens", "azure-openai"),
  "temperature", CAST(0.0 AS DOUBLE),
  "deploymentName", "llmbricks",
  "apiVersion", "2023-03-15-preview",
  "resourceName", "llmbricks"
);
```

Now we create our first function. It annotates a review with entities (i.e. topics discussed), entity sentiments, and whether a follow-up is required and why. Because the prompt returns well-formed JSON, we can have the function return a STRUCT type that can easily be inserted into a Delta table:

```sql
-- Extracts entities, entity sentiment, and whether follow-up is required from a customer review.
-- Since we're receiving well-formed JSON, we can parse it and return a STRUCT data type for easier querying downstream.
CREATE OR REPLACE FUNCTION ANNOTATE_REVIEW(review STRING)
RETURNS STRUCT<entities: ARRAY<STRUCT<entity_name: STRING, entity_type: STRING, entity_sentiment: STRING, followup: STRING, followup_reason: STRING>>>
RETURN FROM_JSON(
  PROMPT_HANDLER(CONCAT(
    'A customer left a review. We follow up with anyone who appears unhappy.
Extract all entities mentioned. For each entity:
- classify sentiment as ["POSITIVE","NEUTRAL","NEGATIVE"]
- whether customer requires a follow-up: Y or N
- reason for requiring followup

Return JSON ONLY. No other text outside the JSON. JSON format:
{
    entities: [{
        "entity_name": <entity name>,
        "entity_type": <entity type>,
        "entity_sentiment": <entity sentiment>,
        "followup": <Y or N for follow up>,
        "followup_reason": <reason for followup>
    }]
}

Review:
', review)),
  "STRUCT<entities: ARRAY<STRUCT<entity_name: STRING, entity_type: STRING, entity_sentiment: STRING, followup: STRING, followup_reason: STRING>>>"
);
```

We create a similar function to generate a response to complaints, including an offer to speak with the relevant product manager:

```sql
-- Generate a response to a customer based on their complaint
CREATE OR REPLACE FUNCTION GENERATE_RESPONSE(product STRING, entity STRING, reason STRING)
RETURNS STRING
COMMENT "Generate a response to a customer based on their complaint"
RETURN PROMPT_HANDLER(
  CONCAT("A customer of ours was unhappy about ", product,
    " specifically about ", entity, " due to ", reason, ". Provide an empathetic ",
    "message I can send to my customer including the offer to have a call with ",
    "the relevant product manager to leave feedback. I want to win back their ",
    "favour and I do not want the customer to churn"));
```

We could combine all of the above logic into a single prompt to minimise API calls and latency. However, we recommend decomposing your questions into granular SQL functions so that they can be reused for other scenarios within your organisation.
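As an illustration of that reuse, here is a minimal sketch of another small, single-purpose function built on the same PROMPT_HANDLER. `CLASSIFY_SENTIMENT` and its prompt wording are hypothetical and not part of the original walkthrough.

```sql
-- Hypothetical reuse of PROMPT_HANDLER: one narrow question per function,
-- so it can be applied to any free-text column, not just product reviews.
CREATE OR REPLACE FUNCTION CLASSIFY_SENTIMENT(input_text STRING)
RETURNS STRING
COMMENT "Hypothetical: classify overall sentiment as POSITIVE, NEUTRAL or NEGATIVE"
RETURN UPPER(TRIM(PROMPT_HANDLER(CONCAT(
  "Classify the overall sentiment of the following text as POSITIVE, NEUTRAL or NEGATIVE. ",
  "Answer with the label only, no explanations or notes. Text: ", input_text))));
```

Because it only depends on PROMPT_HANDLER, the same function could be pointed at `customer_reviews.review_body` or at, say, a hypothetical table of social media posts. The model settings also stay defined in one place.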

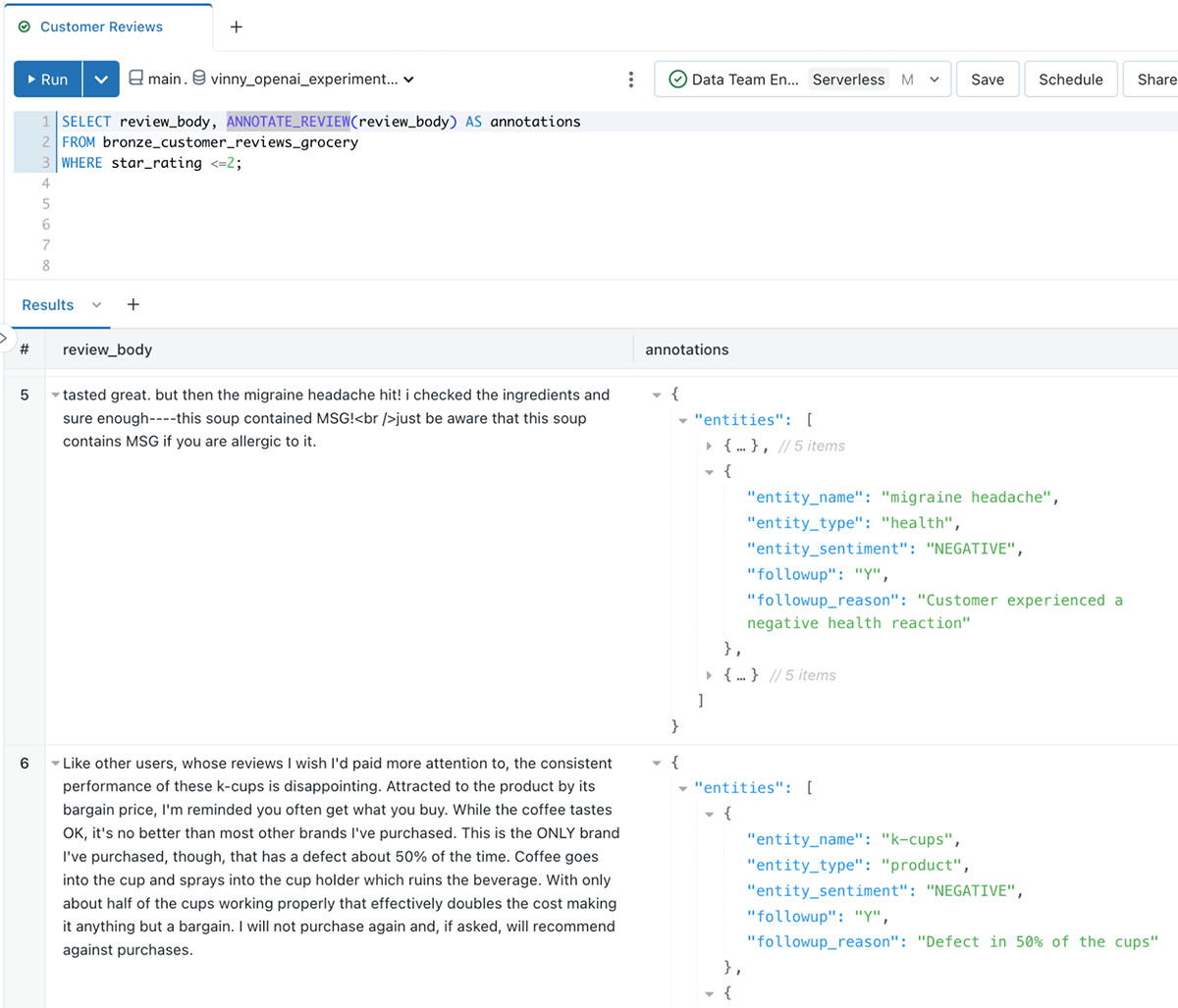

Analysing customer review data

Now let's put our functions to the test!

```sql
SELECT review_body, ANNOTATE_REVIEW(review_body) AS annotations
FROM customer_reviews
```

The LLM function returns well-structured data that we can now easily query!
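The next step reads from a table named `silver_reviews_annotated`, which this post does not show being created. Here is a minimal sketch, assuming it simply persists the query above: every source column plus the `annotations` STRUCT.

```sql
-- Assumed definition: store each review with its parsed annotations so that
-- later steps (and re-runs of those steps) don't need to call the model again.
CREATE OR REPLACE TABLE silver_reviews_annotated
AS
SELECT *, ANNOTATE_REVIEW(review_body) AS annotations
FROM customer_reviews;
```

Every ANNOTATE_REVIEW call is a separate request to Azure OpenAI, so materialising the results once is usually cheaper and more repeatable than re-annotating inside each downstream query.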

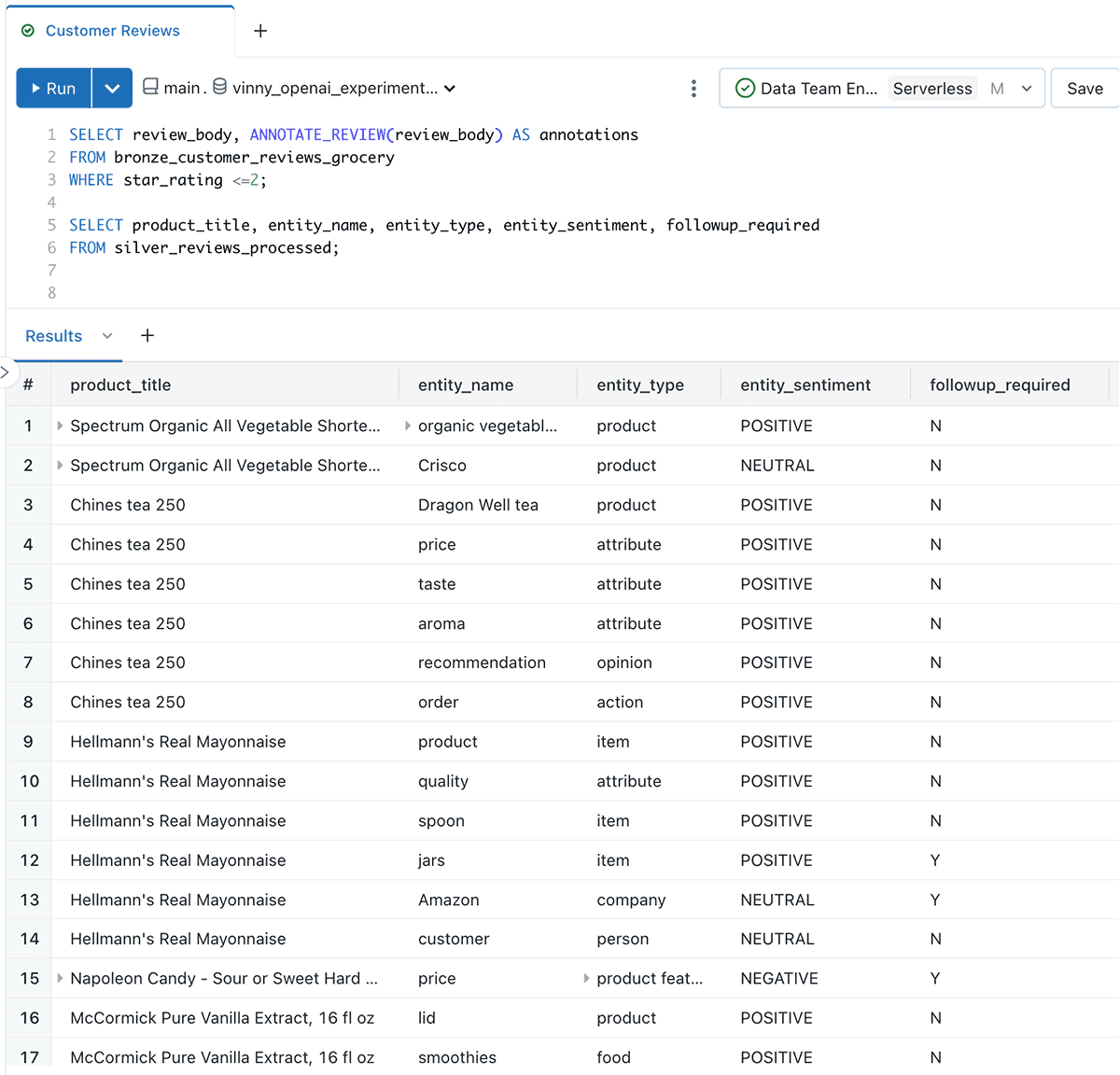

Next, we'll restructure the data into a format that BI tools can query more easily:

```sql
CREATE OR REPLACE TABLE silver_reviews_processed
AS
WITH exploded AS (
  SELECT * EXCEPT(annotations),
    EXPLODE(annotations.entities) AS entity_details
  FROM silver_reviews_annotated
)
SELECT * EXCEPT(entity_details),
  entity_details.entity_name AS entity_name,
  LOWER(entity_details.entity_type) AS entity_type,
  entity_details.entity_sentiment AS entity_sentiment,
  entity_details.followup AS followup_required,
  entity_details.followup_reason AS followup_reason
FROM exploded
```

We now have multiple rows per review, with each row representing the analysis of one entity (topic) discussed in the text:
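With one row per entity, ordinary aggregations become straightforward. For example, the following sketch gives a sentiment breakdown per entity type across all processed reviews:

```sql
-- Count entity mentions by type and sentiment across all processed reviews
SELECT entity_type,
       entity_sentiment,
       COUNT(*) AS mentions
FROM silver_reviews_processed
GROUP BY entity_type, entity_sentiment
ORDER BY entity_type, mentions DESC;
```

`entity_type` is free text chosen by the model; LOWER() only normalises its case. Near-duplicates such as `product` and `products` may therefore still show up as separate groups.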

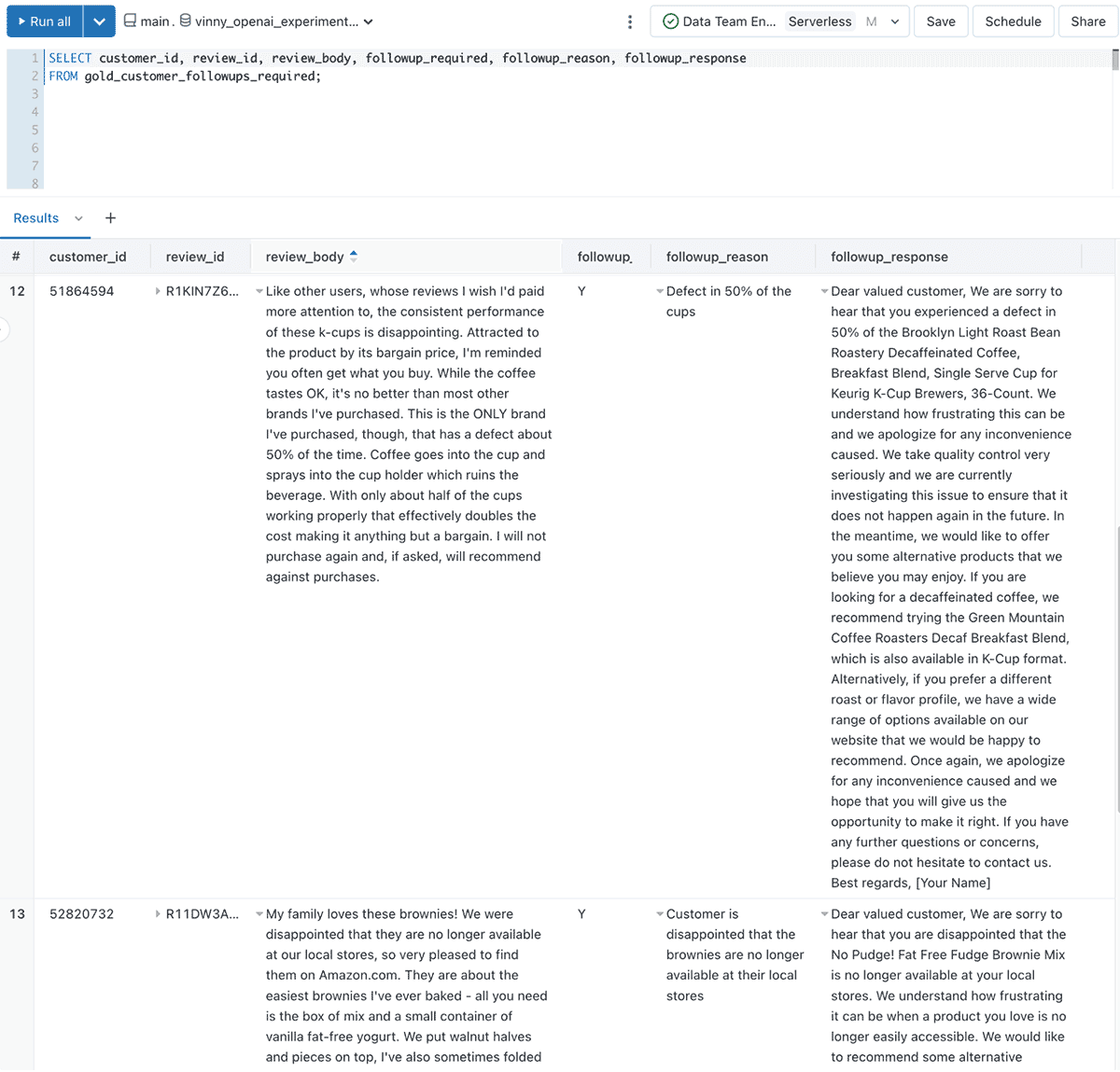

Developing action messages for our client success group

Let’s now develop a dataset for our client success group where they can recognize who needs a reaction, the factor for the action, and even a sample message to begin them off

-- Produce a reaction to a consumer based upon their grievance

PRODUCE OR REPLACE TABLE gold_customer_followups_required.

AS

SELECT *, GENERATE_RESPONSE( product_title, entity_name, followup_reason) AS followup_response.

FROM silver_reviews_processed.

WHERE followup_required = "Y"The resulting information appears like

As client evaluations and feedback stream into the Lakehouse, Susan and her group bypasses the labour-intensive and error-prone job of by hand examining each piece of feedback. Rather, they now invest more time on the high-value job of thrilling their consumers!

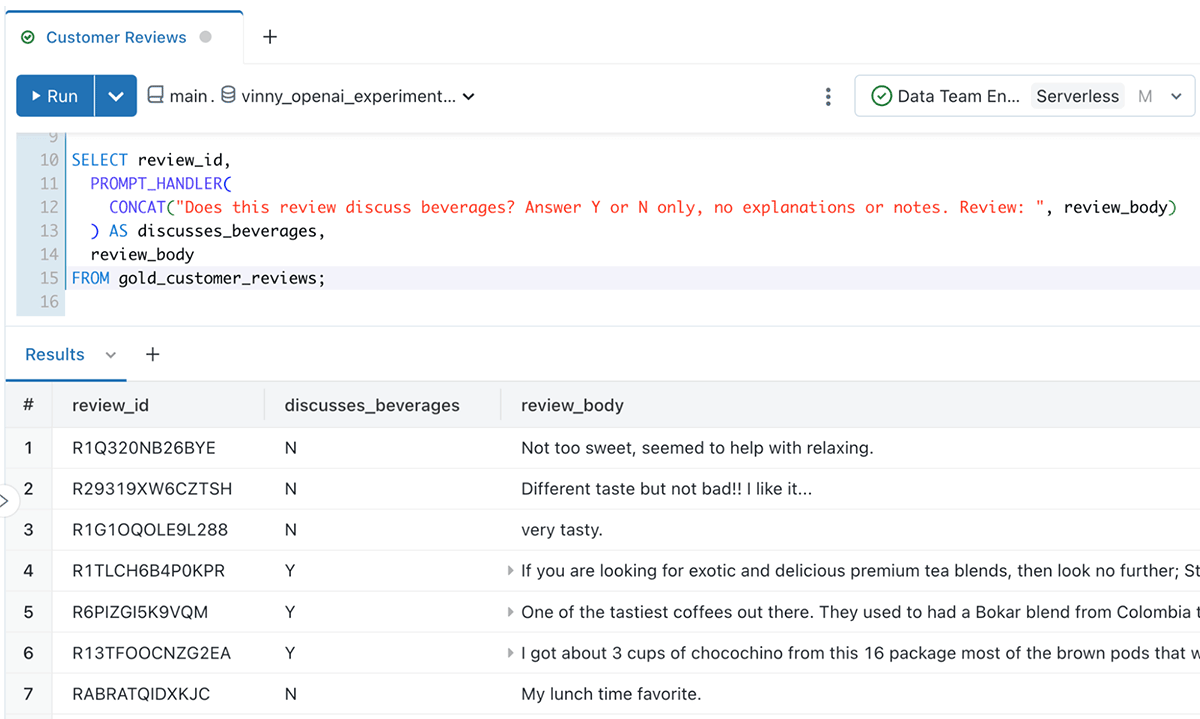
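Because reviews keep arriving, rebuilding the annotated table from scratch would call the model again for reviews that were already processed. One way to avoid that is to append only reviews that are not yet annotated. The sketch below rests on three assumptions: `review_id` uniquely identifies a review, `silver_reviews_annotated` was built as `SELECT *, ANNOTATE_REVIEW(review_body) AS annotations FROM customer_reviews` (so the column order matches), and a simple anti-join is acceptable for your volumes.

```sql
-- Hypothetical incremental step: annotate only reviews not yet present in
-- silver_reviews_annotated. INSERT INTO matches columns by position, which
-- relies on the table having been created as customer_reviews.* + annotations.
INSERT INTO silver_reviews_annotated
SELECT r.*, ANNOTATE_REVIEW(r.review_body) AS annotations
FROM customer_reviews AS r
LEFT ANTI JOIN silver_reviews_annotated AS a
  ON r.review_id = a.review_id;
```

After this append, `silver_reviews_processed` and `gold_customer_followups_required` would be rebuilt from the updated table as shown above.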

Supporting ad-hoc queries

Analysts can also write ad-hoc queries using the PROMPT_HANDLER() function we created earlier. For example, an analyst might want to know whether a review discusses beverages:

```sql
SELECT review_id,
  PROMPT_HANDLER(CONCAT("Does this review discuss beverages?
Answer Y or N only, no explanations or notes. Review: ", review_body))
    AS discusses_beverages,
  review_body
FROM gold_customer_reviews
```

From unstructured data to analysed data in minutes!

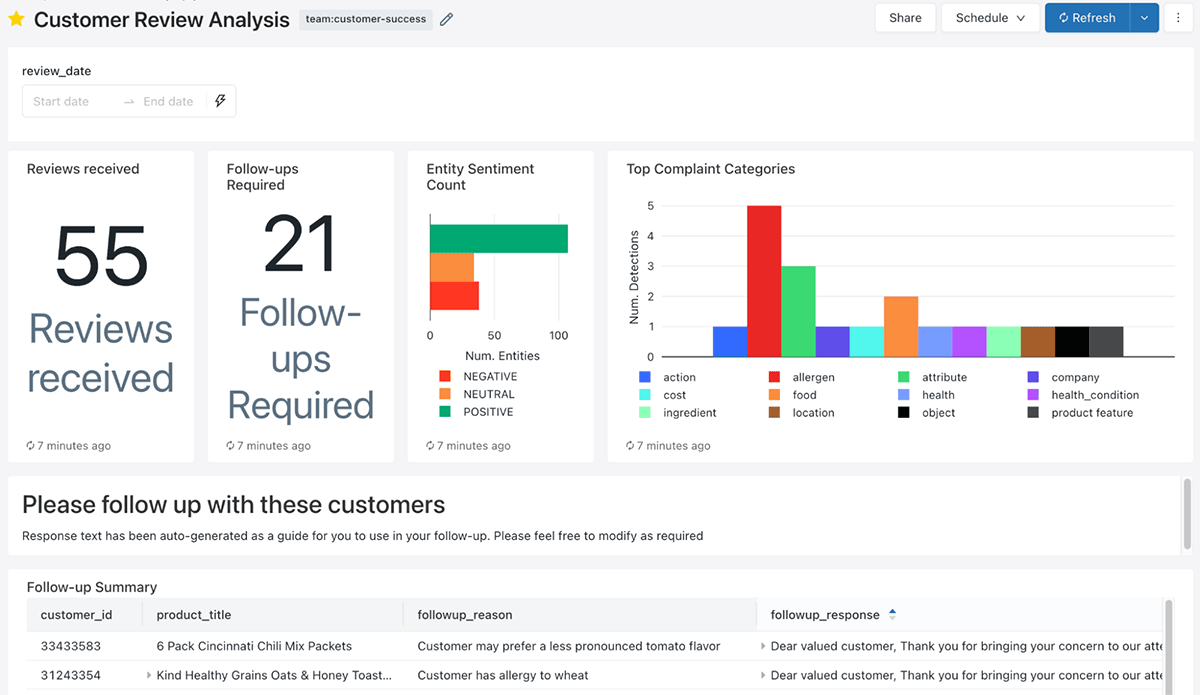
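The same ad-hoc question can feed an aggregate directly. The following sketch wraps the query above in a subquery and counts the answers. Normalising the answer with UPPER() and TRIM() is our own addition, to stop " y" and "Y" from landing in different groups.

```sql
-- Count how many reviews the model flags as discussing beverages.
-- UPPER/TRIM normalise case and stray surrounding spaces in the model's answer.
SELECT discusses_beverages, COUNT(*) AS reviews
FROM (
  SELECT UPPER(TRIM(PROMPT_HANDLER(CONCAT(
           "Does this review discuss beverages? ",
           "Answer Y or N only, no explanations or notes. Review: ", review_body))))
           AS discusses_beverages
  FROM gold_customer_reviews
) AS answers
GROUP BY discusses_beverages;
```

If the model occasionally ignores the instruction and answers "Y." or adds an explanation, those rows appear as extra groups. This makes the query a quick check on how well the prompt is being followed.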

Now when Susan arrives at work in the morning, she's greeted by a dashboard that shows which customers she should be spending time with and why. She's even given starter messages to build on!

To many of Susan's colleagues, this seems like magic! Every magic trick has a secret, and the secret here is AI_GENERATE_TEXT() and how easy it makes applying LLMs to your Lakehouse. Behind the scenes, the Lakehouse has been centralising reviews from multiple data sources, assigning meaning to the data, and recommending next best actions.

Let's recap the key benefits for Susan's business:

- They can immediately apply AI to their data without spending weeks training, building, and operationalising a model

- Analysts and developers can interact with the model using familiar SQL skills

You can apply these SQL functions across your entire Lakehouse, for example:

- Classifying data in real time with Delta Live Tables

- Building and distributing real-time SQL Alerts that warn of increased negative sentiment for a brand

- Capturing product sentiment in Feature Store tables that back real-time serving models
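For the SQL Alerts idea, here is a minimal sketch of a query an alert could be built on: the share of negative entity mentions per product over the last day. It assumes `silver_reviews_processed` still carries a `review_date` column from the source reviews table, and it groups by `product_title` because the review data used here has no separate brand column. The 10-mention floor is an arbitrary illustrative threshold.

```sql
-- Hypothetical alert query: share of NEGATIVE entity mentions per product
-- over the last day. Assumes a review_date column survives from customer_reviews.
SELECT product_title,
       COUNT(*) AS mentions,
       AVG(CASE WHEN entity_sentiment = 'NEGATIVE' THEN 1.0 ELSE 0.0 END) AS negative_share
FROM silver_reviews_processed
WHERE review_date >= date_sub(current_date(), 1)
GROUP BY product_title
HAVING COUNT(*) >= 10 -- skip products with too few mentions to be meaningful
ORDER BY negative_share DESC;
```

An alert could then be configured to fire when `negative_share` exceeds a threshold you choose. The descending order puts the worst-affected product first.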

Areas for consideration

While this workflow adds immediate value to our data without the need to train and maintain our own models, we need to keep a few things in mind:

- The key to an accurate LLM response is a sound, detailed prompt. For example, the order of your instructions and statements sometimes matters. Make sure you periodically refine your prompts. You may spend more time engineering your prompts than writing your SQL logic!

- LLM responses can be non-deterministic. Setting the temperature to 0 makes the responses more deterministic, but never guarantees it. Therefore, if you reprocess data, the output for previously processed records may differ. You can use Delta Lake's time travel and change data feed features to identify changed responses and address them accordingly.

- In addition to integrating LLM services, Databricks also makes it easy to build and operationalise LLMs that you own and that are fine-tuned on your data. For example, learn how we built Dolly. You can use these models together with AI Functions to create insights truly unique to your business.
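To make the time travel suggestion concrete, here is a minimal sketch that compares the current `silver_reviews_processed` with an earlier version and lists entity rows whose follow-up decision changed between runs. Version `1` is a placeholder; take the real number from the table history. Matching on `review_id` plus `entity_name` is our own assumption about what identifies "the same" row across runs.

```sql
-- Find the version written by an earlier run
DESCRIBE HISTORY silver_reviews_processed;

-- Rows whose follow-up decision differs between the current version and an
-- earlier one (version 1 is a placeholder taken from the history above)
SELECT cur.review_id,
       cur.entity_name,
       prev.followup_required AS previous_followup,
       cur.followup_required  AS current_followup
FROM silver_reviews_processed AS cur
JOIN silver_reviews_processed VERSION AS OF 1 AS prev
  ON  cur.review_id = prev.review_id
  AND cur.entity_name = prev.entity_name
WHERE cur.followup_required <> prev.followup_required;
```

An inner join only compares entities that the model extracted in both runs. A FULL OUTER JOIN would additionally surface entities that appeared or disappeared between runs, which is another symptom of non-determinism.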

What next?

Every day the community is showcasing new, creative uses of prompts. What creative uses can you apply to the data in your Databricks Lakehouse?

- Sign up for the public preview of AI Functions here

- Read the docs here

- Follow along with our demo at dbdemos.ai

- Check out our webinar on how to build your own LLM like Dolly here!