Posted by Jay Ji, Senior Product Manager, Google PI; Christian Frueh, Software Engineer, Google Research and Pedro Vergani, Staff Designer, Insight UX

A customizable AI-powered character template that demonstrates the power of LLMs to create interactive experiences with depth

Google’s Partner Innovation team has developed a series of Generative AI templates to showcase how combining Large Language Models with existing Google APIs and technologies can solve specific industry use cases.

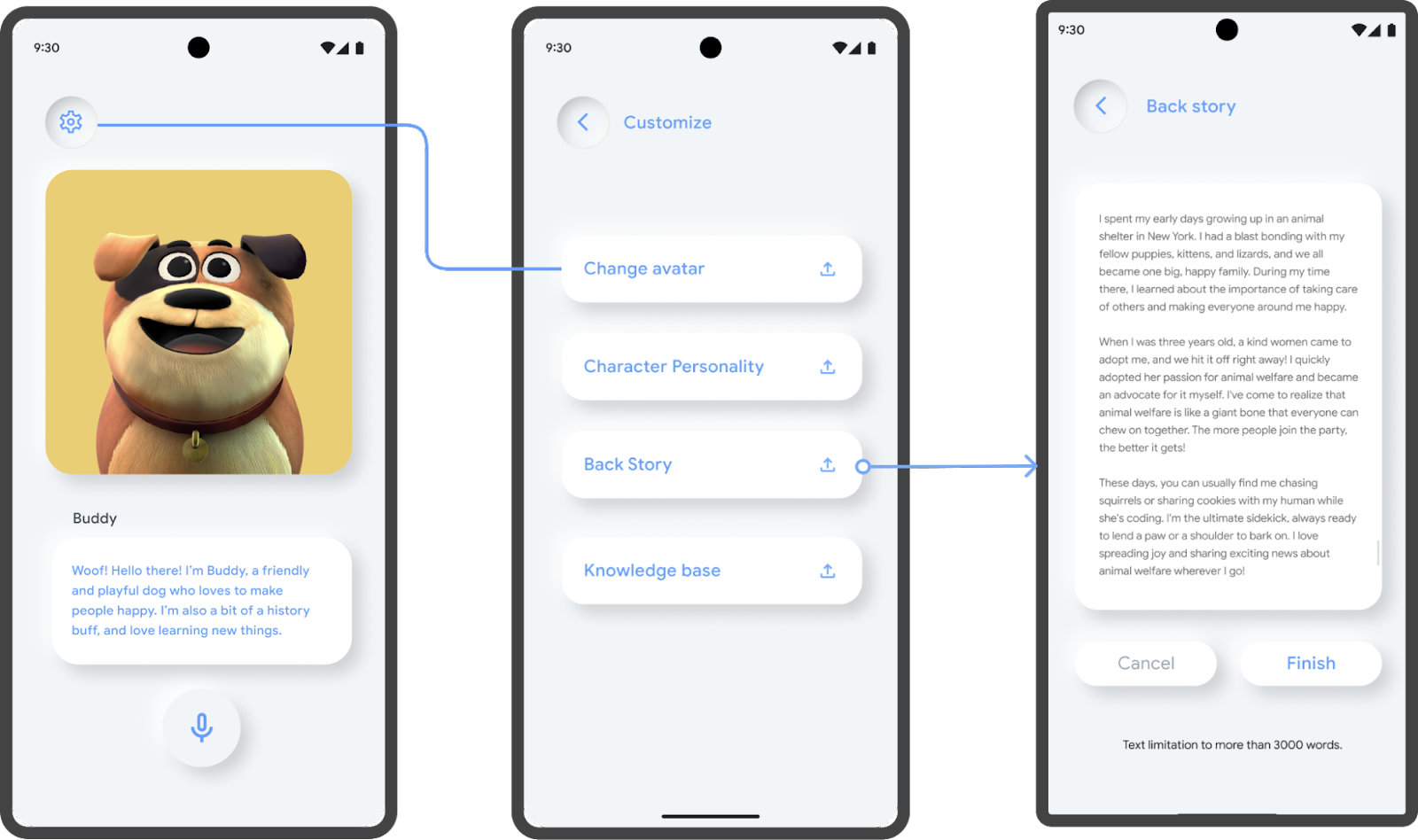

Talking Character is a customizable 3D avatar builder that allows developers to bring an animated character to life with Generative AI. Both developers and users can configure the avatar’s personality, backstory and knowledge base, and thus create a specialized expert with a unique perspective on any given topic. Users can then interact with it through either text or spoken conversation.


As one example, we have defined a base character model, Friend. He’s a friendly dog that we have given a backstory, personality and knowledge base such that users can talk about typical dog life experiences. We also provide an example of how personality and backstory can be changed to assume the persona of a reliable insurance agent, or anything else for that matter.


Our code template is intended to serve two main goals:

First, provide developers and users with a test interface to experiment with the powerful concept of prompt engineering for character development, and with leveraging specific datasets on top of the PaLM API to create unique experiences.

Second, showcase how Generative AI interactions can be enhanced beyond simple text or chat-led experiences. By leveraging cloud services such as speech-to-text and text-to-speech, along with machine learning models to animate the character, developers can create a vastly more natural experience for users.

Potential use cases for this type of technology are diverse. They include an interactive creative tool for developing characters and narratives for gaming or storytelling; tech support, even for complex systems or processes; customer service tailored to specific products or services; debate practice, language learning, or subject-specific education; or simply bringing brand assets to life with a voice and the ability to interact.

Technical Implementation

Interactions

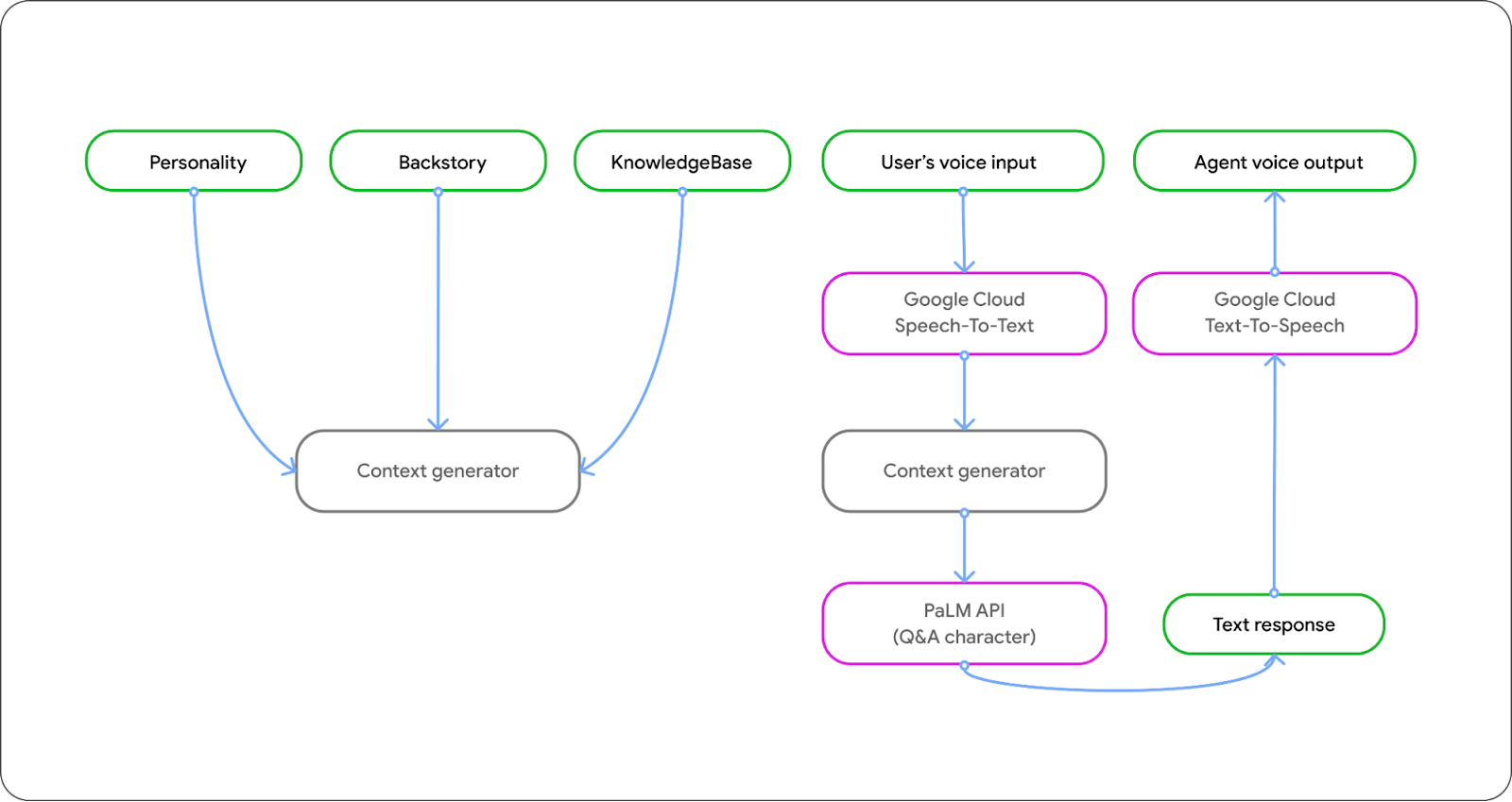

We use several separate technology components to enable a 3D avatar to have a natural conversation with users. First, we use Google’s speech-to-text service to convert speech inputs to text, which is then fed into the PaLM API. We then use text-to-speech to generate a human-sounding voice for the language model’s response.
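
The three-stage flow described above can be sketched as a single function that chains the services. This is only an illustrative outline, not the template's actual code: `run_turn`, `TurnResult`, and the three `fake_*` functions are hypothetical names, and the stand-ins take the place of real calls to a speech-to-text service, the PaLM API, and a text-to-speech service.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class TurnResult:
    """Everything produced by one spoken exchange with the avatar."""
    user_text: str
    reply_text: str
    reply_audio: bytes


def run_turn(
    audio_in: bytes,
    transcribe: Callable[[bytes], str],
    generate_reply: Callable[[str], str],
    synthesize: Callable[[str], bytes],
) -> TurnResult:
    """Run one exchange: speech -> text -> language model reply -> speech."""
    user_text = transcribe(audio_in)           # speech-to-text stage
    reply_text = generate_reply(user_text)     # language model stage
    reply_audio = synthesize(reply_text)       # text-to-speech stage
    return TurnResult(user_text, reply_text, reply_audio)


# Stand-in services (assumptions) so the sketch runs without network access.
def fake_transcribe(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_generate_reply(text: str) -> str:
    return f"Woof! You said: {text}"


def fake_synthesize(text: str) -> bytes:
    return text.encode("utf-8")


result = run_turn(b"hello", fake_transcribe, fake_generate_reply, fake_synthesize)
print(result.reply_text)
```

Passing the services in as callables keeps the orchestration independent of any particular client library, so each stage can be swapped or mocked separately.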


Animation

To enable an interactive visual experience, we created a ‘talking’ 3D avatar that animates based on the pattern and intonation of the generated voice. Using the MediaPipe framework, we leveraged a new audio-to-blendshapes machine learning model to generate facial expressions and lip movements that synchronize with the voice pattern.

Blendshapes are control parameters that are used to animate 3D avatars using a small set of weights. Our audio-to-blendshapes model predicts these weights from speech input in real time to drive the animated avatar. This model is trained from ‘talking head’ videos using TensorFlow, where we use 3D face tracking to learn a mapping from speech to facial blendshapes, as described in this paper.
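
To make the idea of "a small set of weights" concrete, here is a minimal sketch of the standard linear blendshape formulation, in which each posed vertex is the neutral vertex plus a weighted sum of per-shape offsets. It is a generic illustration rather than the model or rig used in the template; the two-vertex mesh and the shape names `jawOpen` and `mouthSmile` are made up for the example.

```python
from typing import Dict, List

Vertex = List[float]  # [x, y, z]


def apply_blendshapes(
    base: List[Vertex],
    deltas: Dict[str, List[Vertex]],
    weights: Dict[str, float],
) -> List[Vertex]:
    """Return base + sum(weight * delta) for every vertex.

    `deltas[name][i]` is the offset that shape `name` applies to vertex i
    when its weight is 1.0. Weights for unknown shapes are ignored.
    """
    posed = [list(v) for v in base]  # copy so the neutral mesh is untouched
    for name, weight in weights.items():
        shape = deltas.get(name)
        if shape is None:
            continue
        for i, delta in enumerate(shape):
            for axis in range(3):
                posed[i][axis] += weight * delta[axis]
    return posed


# Toy two-vertex "mesh" with hypothetical shapes.
base = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]]
deltas = {
    "jawOpen": [[0.0, -1.0, 0.0], [0.0, -1.0, 0.0]],
    "mouthSmile": [[0.0, 0.0, 0.0], [0.5, 0.5, 0.0]],
}
posed = apply_blendshapes(base, deltas, {"jawOpen": 0.5, "mouthSmile": 1.0})
print(posed)
```

Because the whole face is driven by one weight per shape, a model only has to predict a short vector per audio frame rather than the position of every vertex.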

Once the generated blendshape weights are obtained from the model, we use them to morph the facial expressions and lip motion of the 3D avatar, using the open source JavaScript 3D library three.js.
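
As a rough sketch of this step, the snippet below copies named weights into a flat list of influences, one slot per morph target. The data layout mirrors the name-to-index dictionary and influence array that three.js meshes expose (`morphTargetDictionary` and `morphTargetInfluences`), but it is written in plain Python for illustration. The clamping and the exponential smoothing factor are assumptions added here to show one way of avoiding jitter between frames; the source does not say whether the template does either.

```python
from typing import Dict, List


def update_influences(
    influences: List[float],
    index_by_name: Dict[str, int],
    predicted: Dict[str, float],
    smoothing: float = 0.5,
) -> None:
    """Move each influence part of the way toward its predicted weight.

    `smoothing` is the fraction of the remaining distance covered per
    frame (1.0 means jump straight to the target). Predicted weights are
    clamped to [0, 1]; names missing from the avatar are skipped.
    """
    for name, weight in predicted.items():
        idx = index_by_name.get(name)
        if idx is None:
            continue  # the avatar has no morph target with this name
        target = min(1.0, max(0.0, weight))
        influences[idx] += smoothing * (target - influences[idx])


# Hypothetical avatar with two morph targets.
index_by_name = {"jawOpen": 0, "mouthSmile": 1}
influences = [0.0, 0.0]
frame = {"jawOpen": 1.4, "mouthSmile": 0.5, "browRaise": 0.9}

update_influences(influences, index_by_name, frame)
first_frame = list(influences)
update_influences(influences, index_by_name, frame)
print(first_frame, influences)
```

Calling the function once per rendered frame with the latest prediction makes the face ease toward each new pose instead of snapping to it.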

Character Design

In crafting Friend, our intent was to explore how a rich backstory and distinct personality can help users form an emotional bond with a character. Our aim was not just to raise the level of engagement, but to demonstrate how a character, for example one imbued with humor, can shape your interaction with it.

A content writer developed a captivating backstory to ground this character. This backstory, along with its knowledge base, is what gives depth to its personality and brings it to life.

We further sought to incorporate recognizable non-verbal cues, like facial expressions, as indicators of the interaction’s progress. For instance, when the character appears deep in thought, it’s a sign that the model is formulating its response.
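
One simple way to drive such cues is to derive the avatar's expression from the current phase of the conversation. The sketch below is an assumption about how this could be organized, not a description of the template's code; the phase names, expression names, and the priority order in `current_phase` are all hypothetical.

```python
from enum import Enum


class Phase(Enum):
    IDLE = "idle"
    LISTENING = "listening"
    THINKING = "thinking"
    SPEAKING = "speaking"


# Hypothetical expression names; a real avatar would define its own set.
EXPRESSION_BY_PHASE = {
    Phase.IDLE: "neutral",
    Phase.LISTENING: "attentive",
    Phase.THINKING: "deep_in_thought",
    Phase.SPEAKING: "talking",
}


def current_phase(user_speaking: bool, awaiting_model: bool, audio_playing: bool) -> Phase:
    """Pick the interaction phase from three status flags."""
    if user_speaking:
        return Phase.LISTENING
    if awaiting_model:
        return Phase.THINKING  # a request to the language model is pending
    if audio_playing:
        return Phase.SPEAKING
    return Phase.IDLE


def expression_for(phase: Phase) -> str:
    """Look up the facial expression that signals the given phase."""
    return EXPRESSION_BY_PHASE[phase]


print(expression_for(current_phase(False, True, False)))
```

Keeping the mapping in one table makes it easy to restyle the cues for a different character without touching the conversation logic.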

Prompt Structure

Finally, to make the avatar easily customizable with simple text inputs, we designed the prompt structure to have three parts: personality, backstory, and knowledge base. We combine all three pieces into one large prompt and send it to the PaLM API as the context.
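
A minimal sketch of this three-part structure might look like the following. The `CharacterConfig` class, the `build_context` function, the section labels, and the sample text are illustrative assumptions; the exact wording and formatting of the template's real prompt are not given in this post.

```python
from dataclasses import dataclass


@dataclass
class CharacterConfig:
    """The three editable text inputs that define a character."""
    personality: str
    backstory: str
    knowledge_base: str


def build_context(config: CharacterConfig) -> str:
    """Join the three parts into one labeled context string."""
    sections = [
        ("Personality", config.personality),
        ("Backstory", config.backstory),
        ("Knowledge base", config.knowledge_base),
    ]
    return "\n\n".join(f"{title}:\n{text.strip()}" for title, text in sections)


# Made-up sample content for a dog character.
dog = CharacterConfig(
    personality="Cheerful, loyal, and easily distracted by squirrels.",
    backstory="Grew up on a farm before moving to the city.",
    knowledge_base="Dogs need daily walks. Chocolate is unsafe for dogs.",
)
context = build_context(dog)
print(context)
```

The resulting string would then be passed to the language model as its context, with each user message sent separately. Changing any one of the three fields is enough to produce a different character.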


Partnerships and Use Cases

ZEPETO, beloved by Gen Z, is an avatar-centric social universe where users can fully customize their digital personas, explore fashion trends, and engage in vibrant self-expression and virtual interaction. Our Talking Character template allows users to create their own avatars, dress them up in different clothes and accessories, and interact with other users in virtual worlds. We are working with ZEPETO and have tested their metaverse avatar with over 50 blendshapes with great results.


“Seeing an AI character come to life as a ZEPETO avatar and speak with such fluidity and depth is truly inspiring. We believe a combination of advanced language models and avatars will considerably expand what is possible in the metaverse, and we are excited to be a part of it.” – Daewook Kim, CEO, ZEPETO

The demo is not restricted to metaverse use cases, though. It shows how characters can bring a text corpus or knowledge base to life in any domain.

For example, in gaming, LLM-powered NPCs could enrich the universe of a game and deepen the user experience through natural language conversations about the game’s world, history and characters.

In education, characters can be created to represent different subjects a student is studying, different levels of difficulty in an interactive educational quiz scenario, or specific characters and events from history, helping people learn about different cultures, places, people and times.

In commerce, the Talking Character kit could be used to bring brands and stores to life, or to power merchants in an eCommerce marketplace, democratizing tools that make their stores more engaging and personalized and give a better user experience. It could also be used to create avatars for customers as they explore a retail environment and to gamify the experience of shopping in the real world.

Even more broadly, any brand, product or service can use this demo to bring a talking agent to life that can interact with users based on any knowledge set or tone of voice, acting as a brand ambassador, customer service representative, or sales assistant.

Open Source and Developer Support

Google’s Partner Innovation team has developed a series of Generative AI Templates showcasing the possibilities when combining LLMs with existing Google APIs and technologies to solve specific industry use cases. Each template was launched at I/O in May this year, and open-sourced for developers and partners to build upon.

We will work closely with several partners on an EAP that allows us to co-develop and launch specific features and experiences based on these templates, as and when the API is released in each respective market (APAC timings TBC). Talking Character will also be open sourced so developers and startups can build on top of the experiences we have created. Google’s Partner Innovation team will continue to build features and tools in partnership with local markets to expand on the R&D already underway. View the project on GitHub here.

Acknowledgements

We would like to acknowledge the invaluable contributions of the following people to this project: Mattias Breitholtz, Yinuo Wang, Vivek Kwatra, Tyler Mullen, Chuo-Ling Chang, Benefit Panichprecha, Lek Pongsakorntorn, Zeno Chullamonthon, Yiyao Zhang, Qiming Zheng, Joyce Li, Xiao Di, Heejun Kim, Jonghyun Lee, Hyeonjun Jo, Jihwan Im, Ajin Ko, Amy Kim, Dream Choi, Yoomi Choi, KC Chung, Edwina Priest, Joe Fry, Bryan Tanaka, Sisi Jin, Agata Dondzik, Miguel de Andres-Clavera.