Latency matters in machine learning applications. In high-latency situations, fraud goes undetected and causes millions in losses, security vulnerabilities are left unaddressed and give attackers an open door, and recommendations fail to incorporate the latest user interactions and become irrelevant. The 2022 Uber hack showed the world that companies are still very vulnerable to social engineering attacks, and being able to detect anomalous behavior such as IP address scanning within seconds rather than hours can make all the difference.

Real-time machine learning (ML) involves deploying and maintaining machine learning models that make on-demand predictions for use cases like product recommendations, ETA forecasting, fraud detection and more. In real-time ML, the freshness of the features, the serving latency, and the uptime and availability of the data pipeline and model all matter. Making a decision late has operational and cost implications.

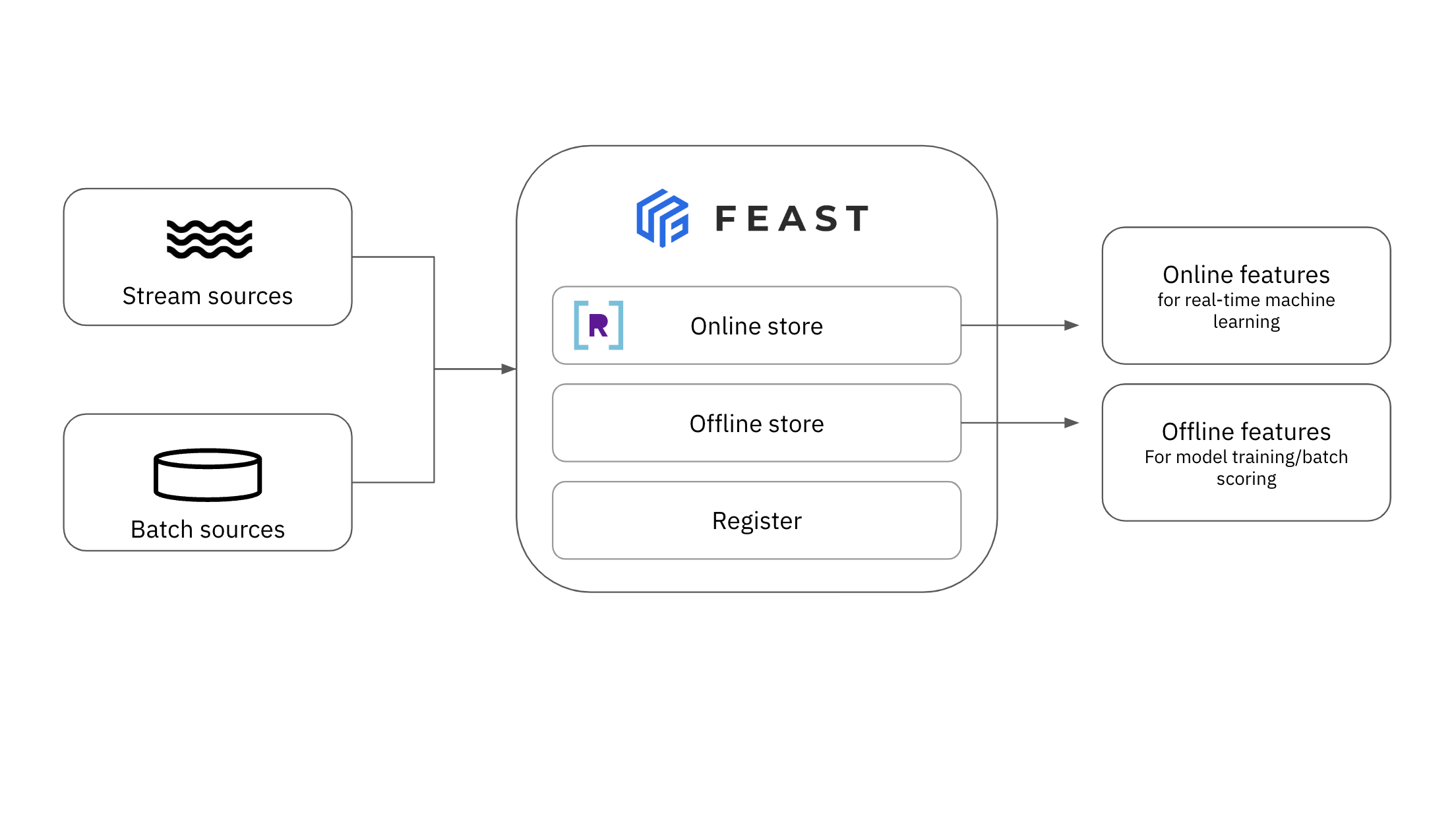

To better serve real-time machine learning, Rockset integrates with the Feast Feature Store, which acts as a centralized platform for deploying, monitoring and managing production ML features. The feature store is one of many tools created to support shipping and maintaining models in production, an area of expertise recently dubbed MLOps. The goal of a feature store is to unify the set of features available for training and serving across an organization. With feature stores, different teams can train and deploy on standardized features rather than being siloed off and generating similar features on their own. Just as a git repo lets an engineering team use and modify the same pool of code, a feature repo lets people share and manage the same set of features.

In addition to standardizing how features are stored and generated, feature stores can also help monitor your training data. By keeping an eye on the quality of the data used to generate features, you add another layer of defense against training a bad model (garbage in, garbage out, as they say).

Here are some of the benefits of adopting a feature store like Feast:

- Feature Management: deduplicate and standardize features across an organization

- Feature Computation: materialize features in a deterministic way

- Feature Validation: validate features to avoid training on "junk" data
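Feature validation can be as simple as checking each batch against expected types and value ranges before it reaches training. The sketch below is a minimal, hypothetical illustration of that idea, not Feast's validation API; the column names mirror the BETH fields used later in this post, and the rules in EXPECTED_SCHEMA are assumptions chosen for the example.

```python
from typing import Iterable, List, Mapping, Optional, Set, Tuple

# Hypothetical validation rules: column -> (expected type, allowed values or None).
# Column names mirror the BETH fields used later in this demo; the rules are assumptions.
EXPECTED_SCHEMA: Mapping[str, Tuple[type, Optional[Set[int]]]] = {
    "processId": (int, None),
    "eventName": (str, None),
    "sus": (int, {0, 1}),
    "evil": (int, {0, 1}),
}


def validate_rows(rows: Iterable[Mapping[str, object]]) -> List[str]:
    """Return human-readable problems found in a batch; an empty list means it passed."""
    problems: List[str] = []
    for i, row in enumerate(rows):
        for column, (expected_type, allowed) in EXPECTED_SCHEMA.items():
            value = row.get(column)
            if value is None:
                problems.append(f"row {i}: missing {column}")
            elif not isinstance(value, expected_type):
                problems.append(f"row {i}: {column}={value!r} is not {expected_type.__name__}")
            elif allowed is not None and value not in allowed:
                problems.append(f"row {i}: {column}={value!r} not in {sorted(allowed)}")
    return problems


# A training job could refuse to start when a batch reports problems:
batch = [
    {"processId": 383, "eventName": "socket", "sus": 0, "evil": 0},
    {"processId": 1, "eventName": "openat", "sus": 2},
]
for problem in validate_rows(batch):
    print(problem)
```

Returning a list of problems rather than raising on the first one lets a pipeline log everything wrong with a batch in a single pass.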

Now you might think, "Wow, that sounds a lot like materialized views. How do feature stores differ from standard analytical databases?" Well, that's a bit of a trick question. Feature stores help provide ML orchestration and often leverage multiple databases for model training and serving. Here are the benefits you get from using Rockset as the database for real-time ML:

- Real-time, streaming data for ML: Rockset handles real-time streaming data for machine learning with compute-compute separation, isolating streaming ingest compute from query compute for predictable performance even in the face of high-volume writes and low-latency reads.

- Turn events into real-time features: Rockset turns events into features in real time with SQL ingest transformations, efficiently computing time-windowed aggregation features within 1-2 seconds of when the data was generated.

- Serve real-time features with millisecond latency: Rockset uses its Converged Index to serve features to applications in milliseconds.

- Ensure service levels at scale: Rockset meets the stringent latency requirements of real-time analytics and is designed for high availability and durability with no scheduled downtime.
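Rockset computes time-windowed aggregations with SQL ingest transformations. Purely to illustrate what such a feature contains, the standard-library Python sketch below computes a trailing-window event count per user. It is not Rockset's SQL or implementation; the function name windowed_event_counts and the (timestamp, user_id) event shape are assumptions made for this example.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Iterable, List, Tuple


def windowed_event_counts(
    events: Iterable[Tuple[float, int]], window_seconds: float
) -> List[Tuple[float, int, int]]:
    """For each (timestamp, user_id) event, processed in time order, emit
    (timestamp, user_id, number of that user's events in the trailing window).

    The window is half-open: (timestamp - window_seconds, timestamp]."""
    recent: Dict[int, Deque[float]] = defaultdict(deque)
    features: List[Tuple[float, int, int]] = []
    for ts, user_id in sorted(events):
        window = recent[user_id]
        window.append(ts)
        # Evict this user's events that have slid out of the trailing window.
        while window and window[0] <= ts - window_seconds:
            window.popleft()
        features.append((ts, user_id, len(window)))
    return features


# Per-user event counts over a 60-second trailing window.
print(windowed_event_counts([(0.0, 1), (10.0, 1), (30.0, 1), (65.0, 1), (5.0, 2)], 60.0))
```

Keeping one deque per user means each event is appended and evicted at most once, so the work stays proportional to the number of events rather than to the window size.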

While Rockset can be used as a stand-alone feature store for real-time ML, in today's demo we're going to walk through how to use Rockset with the Feast Feature Store, which is tailored to make machine learning feature management a breeze.

Learn more about how Rockset extends its real-time analytics capabilities to machine learning. Join VP of Engineering Louis Brandy and product manager John Solitario for the talk From Spam Fighting at Facebook to Vector Search at Rockset: How to Build Real-Time Machine Learning at Scale on May 17th.

Overview of the Feast Integration

Feast is one of the most popular feature stores out there. It is open source and backed by Tecton, the feature platform for machine learning. Feast lets you train models on a consistent set of features and separates storage out as an abstraction, which makes model training portable. Along with hosting offline features for batch training, Feast also supports online features, so users can quickly fetch materialized features as input to a trained model used for real-time prediction.

Recently, Rockset integrated with the popular open source Feast Feature Store as a community-contributed online store. Rockset is a great fit for serving features in production because the database is purpose-built for real-time ingestion and millisecond-latency queries.

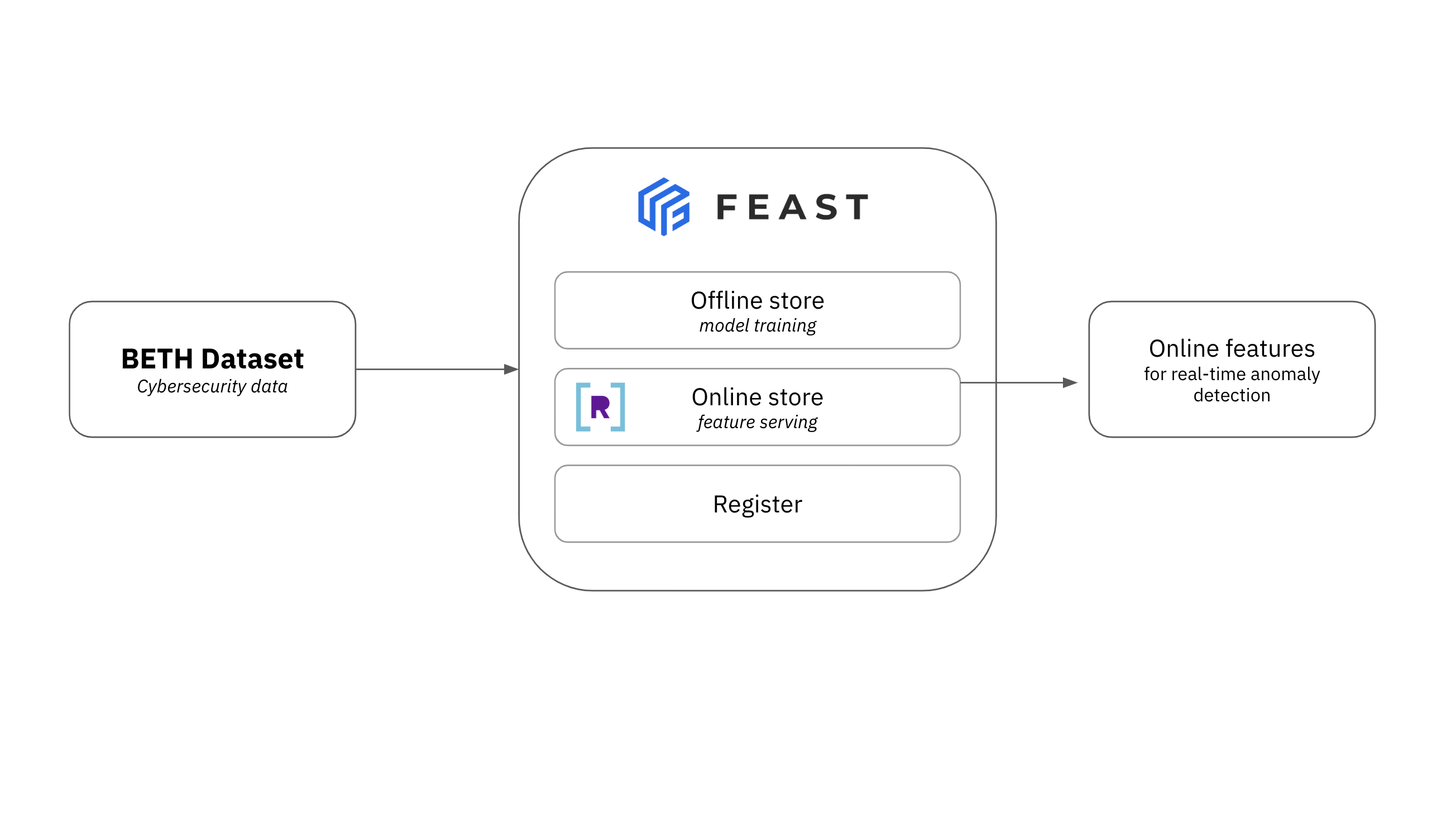

Real-Time Anomaly Detection with Feast and Rockset

One common use case that requires real-time feature serving is anomaly detection. By detecting anomalies in real time, immediate action can be taken to mitigate risk and prevent damage.

In this example, given some service logs, we want to quickly extract features and pipe them into a model that then outputs a threat probability. We show how to serve features in Rockset using the BETH Dataset, a cybersecurity dataset with 8M+ data points that was purpose-built for training anomaly detection models. Benign and malicious kernel and network activity data was collected using a honeypot, in this case a server set up with low-level monitoring tools that allowed access with any SSH key. After the data was collected, each event in the dataset was manually labeled "sus" for unusual behavior or "evil" for malicious behavior. We can imagine training a model offline on this dataset and then running model prediction on a real-time activity log to estimate ongoing threat levels.
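To make "train offline, predict online" concrete, here is a deliberately simple stand-in for a model, not anything from the BETH authors or this demo: it estimates, per eventName, the smoothed fraction of labelled rows marked evil, then scores a newly served event by looking up its eventName. The function names and the add-alpha smoothing are illustrative assumptions; a real detector would combine many more features.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def fit_event_threat_rates(
    labelled: Iterable[Tuple[str, int]], alpha: float = 1.0
) -> Tuple[Dict[str, float], float]:
    """Offline step: estimate P(evil=1 | eventName) with add-alpha smoothing.

    Returns (per-event rates, prior rate used for event names never seen in training)."""
    totals: Counter = Counter()
    evil: Counter = Counter()
    for event_name, is_evil in labelled:
        totals[event_name] += 1
        evil[event_name] += is_evil
    n = sum(totals.values())
    prior = (sum(evil.values()) + alpha) / (n + 2 * alpha)
    rates = {
        name: (evil[name] + alpha) / (count + 2 * alpha)
        for name, count in totals.items()
    }
    return rates, prior


def score_event(event_name: str, rates: Dict[str, float], prior: float) -> float:
    """Online step: look up the threat rate for a freshly served eventName feature."""
    return rates.get(event_name, prior)


rates, prior = fit_event_threat_rates(
    [("socket", 0), ("socket", 0), ("kill", 1), ("kill", 1), ("kill", 0)]
)
print(score_event("kill", rates, prior), score_event("openat", rates, prior))
```

The split mirrors the architecture in this post: the fitting function runs against offline data, while the scoring function only needs the latest feature values, which is exactly what the online store serves.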

Connect Feast to Rockset

First, let's install Feast and the Rockset Python client:

pip install feast

pip install rockset

Then initialize the Feast repo:

feast init -t rockset anomaly_feast_project

You will be prompted for an API key and a host URL, which you can find in the Rockset console. Alternatively, you can leave these blank and set the environment variables described below. Next, go into the generated project:

cd anomaly_feast_project/feature_repo

There we will find our feature_store.yaml config file. Let's update this file to point to our Rockset account. Following the Feast reference guide for Rockset, fill in feature_store.yaml:

project: anomaly_feast_project
registry: data/registry.db
provider: local
online_store:
    type: rockset
    api_key: <<YOUR_APIKEY>>
    host: <<REGION_ENDPOINT_URL>>

If we answered the initialization prompts, these values should already be filled in. To update them, generate an API key in the Rockset console and fetch the Region Endpoint URL (host) there as well. Note: if api_key or host in feature_store.yaml is left empty, the driver will attempt to read these values from the local environment variables ROCKSET_APIKEY and ROCKSET_APISERVER.
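The credential lookup described in the note behaves roughly like the following sketch. It mirrors only the documented rule (an empty api_key or host in feature_store.yaml defers to ROCKSET_APIKEY or ROCKSET_APISERVER); resolve_rockset_credentials is a hypothetical name rather than the Feast Rockset driver's actual code, and raising ValueError when both sources are empty is an assumption of this sketch.

```python
import os
from typing import Mapping, Optional, Tuple


def resolve_rockset_credentials(
    online_store_config: Mapping[str, Optional[str]],
    env: Mapping[str, str] = os.environ,
) -> Tuple[str, str]:
    """Prefer non-empty values from the online_store section of feature_store.yaml,
    otherwise fall back to the ROCKSET_APIKEY / ROCKSET_APISERVER environment variables."""
    api_key = online_store_config.get("api_key") or env.get("ROCKSET_APIKEY")
    host = online_store_config.get("host") or env.get("ROCKSET_APISERVER")
    if not api_key or not host:
        raise ValueError(
            "Rockset api_key/host missing from both feature_store.yaml and the environment"
        )
    return api_key, host


# Blank values in the YAML section defer to the environment.
print(resolve_rockset_credentials(
    {"type": "rockset", "api_key": "", "host": ""},
    env={"ROCKSET_APIKEY": "example-key", "ROCKSET_APISERVER": "example-host"},
))
```

Passing env explicitly keeps the lookup easy to test without touching the real process environment.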

Fetching Features for Real-Time Anomaly Detection

Now download the anomaly detection dataset to the data/ directory. We will use one of the files for the demo, but the steps below can be applied to all of them. The dataset stores two types of data: kernel-level process calls and network traffic. Let's look at the process calls.

cd data && cp ~/Downloads/archive.zip . && unzip archive.zip && cd -

View one of the downloaded data files as an example:

import pandas as pd

df = pd.read_csv("data/labelled_2021may-ip-10-100-1-186.csv")
print(df)

The output lists the kernel process calls available for security analysis:

timestamp processId parentProcessId userId processName hostName eventId eventName argsNum returnValue args sus evil
0 124.952820 383 1 101 systemd-resolve ip-10-100-1-186 41 socket 3 15 [{'name': 'domain', 'type': 'int', 'value': 'A... 0 0
1 124.953139 380 1 100 systemd-network ip-10-100-1-186 41 socket 3 15 [{'name': 'domain', 'type': 'int', 'value': 'A... 0 0
2 124.953424 1 0 0 systemd ip-10-100-1-186 1005 security_file_open 4 0 [{'name': 'pathname', 'type': 'const char*', '... 0 0
3 124.953464 1 0 0 systemd ip-10-100-1-186 257 openat 4 17 [{'name': 'dirfd', 'type': 'int', 'value': -10... 0 0
4 124.953494 1 0 0 systemd ip-10-100-1-186 5 fstat 2 0 [{'name': 'fd', 'type': 'int', 'value': 17}, {... 0 0
... ... ... ... ... ... ... ... ... ... ... ... ... ...
713862 16026.611442 159 1 0 systemd-journal ip-10-100-1-186 1005 security_file_open 4 0 [{'name': 'pathname', 'type': 'const char*', '... 0 0
713863 16026.611475 159 1 0 systemd-journal ip-10-100-1-186 257 openat 4 34 [{'name': 'dirfd', 'type': 'int', 'value': -10... 0 0
713864 16026.611515 159 1 0 systemd-journal ip-10-100-1-186 5 fstat 2 0 [{'name': 'fd', 'type': 'int', 'value': 34}, {... 0 0
713865 16026.611582 159 1 0 systemd-journal ip-10-100-1-186 257 openat 4 -2 [{'name': 'dirfd', 'type': 'int', 'value': -10... 0 0
713866 16026.619387 506 1 104 rs:main Q:Reg ip-10-100-1-186 62 kill 2 0 [{'name': 'pid', 'type': 'pid_t', 'value': 506... 0 0

OK, we have imported the data. Let's write some code that generates interesting features by creating a feature definition file, anomaly_detection_repo.py. This file declares entities (logical objects described by a set of features) and feature views (groups of features associated with zero or more entities). You can read more about feature definition files in the Feast documentation. For our demo setup we will use the processName, processId and eventName features collected in the kernel-process logs as our online features.

import time
from datetime import timedelta

import pandas as pd
from feast import Entity, FeatureView, Field, FileSource, PushSource, ValueType
from feast.types import Int64, String


def sanitize_and_write_to_parquet(csv_path: str, parquet_path: str) -> pd.DataFrame:
    # The timestamps for this dataset are logical and do not correspond to
    # datetimes. For Feast to understand them, we convert them to recent
    # datetimes that we can easily materialize.
    df = pd.read_csv(csv_path)
    timeval = time.time() - 5000 + df["timestamp"].astype(float)
    df["timestamp"] = pd.to_datetime(timeval, unit="s")
    df.to_parquet(path=parquet_path, allow_truncated_timestamps=True, coerce_timestamps="ms")
    return df


csv_file_path = "data/labelled_2021may-ip-10-100-1-186.csv"
# Swap the .csv extension for .parquet
parquet_path = csv_file_path.rsplit(".", 1)[0] + ".parquet"
df = sanitize_and_write_to_parquet(csv_file_path, parquet_path)

# Entity: the user (join key userId) that the features describe
user_entity = Entity(
    name="user",
    description="User that performed the network request.",
    value_type=ValueType.INT64,
    join_keys=["userId"],
)

# Offline (batch) source: the parquet file written above
network_stats_source = FileSource(
    name="security_honeypot_data_source",
    path=parquet_path,
    timestamp_field="timestamp",
)

# Push source backed by the batch source, so fresh events can also be pushed directly
push_source = PushSource(
    name="anomaly_stats_push_source",
    batch_source=network_stats_source,
)

bpf_feature_view = FeatureView(
    name="user_network_request_features",
    entities=[user_entity],
    ttl=timedelta(hours=50),
    schema=[
        Field(name="processName", dtype=String),
        Field(name="processId", dtype=Int64),
        Field(name="eventName", dtype=String),
        Field(name="sus", dtype=Int64),
        Field(name="evil", dtype=Int64),
    ],
    online=True,
    source=push_source,
)
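The rebasing in sanitize_and_write_to_parquet maps a logical timestamp t to now - 5000 + t seconds. Because the printed output above runs to roughly 16026 seconds, rows with t above 5000 end up later than the current time, so a materialization that ends at the current time would not pick them up. If you want every row to be eligible, one alternative is to pin the latest event to the current time and keep the relative spacing, sketched below with the standard library. The function name rebase_logical_timestamps is hypothetical, and unlike the pandas code above it returns timezone-aware UTC datetimes.

```python
from datetime import datetime, timedelta, timezone
from typing import List, Optional, Sequence


def rebase_logical_timestamps(
    logical_seconds: Sequence[float], now: Optional[datetime] = None
) -> List[datetime]:
    """Map logical (seconds-offset) timestamps onto recent UTC datetimes.

    The latest event is pinned to `now`, so every row lands at or before the
    current time while the spacing between events is preserved.
    Raises ValueError on empty input (from max())."""
    if now is None:
        now = datetime.now(timezone.utc)
    latest = max(logical_seconds)
    return [now - timedelta(seconds=latest - t) for t in logical_seconds]


# First and last logical timestamps from the printed output above.
for ts in rebase_logical_timestamps([124.952820, 16026.619387]):
    print(ts.isoformat())
```

In pandas, the same idea can be expressed by subtracting pd.to_timedelta of the distance from df["timestamp"].max() from the current timestamp.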

We can register the newly written feature definitions by saving them in the repo and running:

feast apply

Serve Features in Milliseconds

In Feast, populating the online store involves materializing features over some time range from the offline store, where the latest value for each feature is taken. Once the materialized features have been loaded into the online store, we can query them within the namespace of their feature view. Let's launch the Feast feature server, materialize some online features and query them! First, write a small script to start the server:

from datetime import datetime

from feast import FeatureStore


def run_server():
    store = FeatureStore(repo_path=".")
    # Materialize all data within our ttl (50 hours) from the offline store
    # (parquet file) to the online store (Rockset).
    store.materialize_incremental(end_date=datetime.utcnow())
    # Start the HTTP feature server on localhost:6566
    store.serve(host="127.0.0.1", port=6566, type_="http", no_access_log=True, no_feature_log=True)


if __name__ == "__main__":
    run_server()
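To make the materialization step more concrete, here is a simplified, in-memory model of it: a dict stands in for the online store, and each entity key keeps only the newest row whose event timestamp falls inside the window. The function name materialize and the half-open [start, end) window are assumptions of this sketch. It is not Feast's implementation, which among other things records where each incremental run ended so the next run can resume from there.

```python
from datetime import datetime, timedelta
from typing import Dict, Iterable, Optional, Tuple

# (entity key, event timestamp, feature values) as read from the offline store
OfflineRow = Tuple[int, datetime, Dict[str, object]]
OnlineStore = Dict[int, Tuple[datetime, Dict[str, object]]]


def materialize(
    offline_rows: Iterable[OfflineRow],
    online_store: OnlineStore,
    end: datetime,
    ttl: timedelta,
    start: Optional[datetime] = None,
) -> OnlineStore:
    """Copy the newest row per entity with start <= timestamp < end into online_store.

    With no explicit start, the window reaches back one ttl from end, roughly what a
    first incremental run covers when end is the current time."""
    if start is None:
        start = end - ttl
    for key, ts, values in offline_rows:
        if not (start <= ts < end):
            continue  # outside the materialization window
        current = online_store.get(key)
        if current is None or ts > current[0]:
            online_store[key] = (ts, values)  # keep only the latest value per key
    return online_store


end = datetime(2023, 3, 29, 17, 0)
rows = [
    (0, end - timedelta(hours=2), {"processName": "systemd"}),
    (0, end - timedelta(hours=1), {"processName": "systemd-journal"}),
    (101, end - timedelta(hours=60), {"processName": "systemd-resolve"}),  # older than ttl
]
print(materialize(rows, {}, end=end, ttl=timedelta(hours=50)))
```

Only the newest in-window row for userId 0 survives, and the 60-hour-old row is skipped because it falls outside the 50-hour ttl.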

After starting the script, let's query some input features that would be passed to our trained detection model:

curl -X POST \
  "http://localhost:6566/get-online-features" \
  -d '{
    "features": [
      "user_network_request_features:processName",
      "user_network_request_features:processId",
      "user_network_request_features:eventName"
    ],
    "entities": {
      "userId": [0]
    }
  }' | jq

Response:

{
  "metadata": {
    "feature_names": [
      "userId",
      "processName",
      "eventName",
      "processId"
    ]
  },
  "results": [
    {
      "values": [
        0
      ],
      "statuses": [
        "PRESENT"
      ],
      "event_timestamps": [
        "1970-01-01T00:00:00Z"
      ]
    },
    {
      "values": [
        "systemd"
      ],
      "statuses": [
        "PRESENT"
      ],
      "event_timestamps": [
        "2023-03-29T16:51:02.633Z"
      ]
    },

...

And that's it! We can now serve our features from feature views, each backed by a Rockset collection that is queryable with sub-second latency.
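If you would rather call the feature server from Python than from curl, the request and response map onto a few lines of standard-library code. This is a minimal sketch, assuming the server started by run_server() is listening on localhost:6566 and replies in the shape shown above, where metadata.feature_names lines up index by index with results. The helper names build_online_features_request, flatten_response and get_online_features are hypothetical, not part of Feast, and the feature references in the usage comment use the feature view defined in anomaly_detection_repo.py (user_network_request_features). Inside the same process, Feast's own FeatureStore.get_online_features is the more direct route.

```python
import json
import urllib.request
from typing import Any, Dict, List


def build_online_features_request(features: List[str], entities: Dict[str, List[Any]]) -> bytes:
    """JSON body for /get-online-features, with the same shape as the curl payload."""
    return json.dumps({"features": features, "entities": entities}).encode("utf-8")


def flatten_response(response: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Turn the column-oriented response into one dict per requested entity row."""
    names = response["metadata"]["feature_names"]
    # results[i]["values"] holds one value per entity row for feature_names[i]
    columns = [result["values"] for result in response["results"]]
    return [dict(zip(names, row)) for row in zip(*columns)]


def get_online_features(
    url: str, features: List[str], entities: Dict[str, List[Any]], timeout: float = 5.0
) -> List[Dict[str, Any]]:
    """POST the request to a running feature server and flatten its JSON reply."""
    request = urllib.request.Request(
        url,
        data=build_online_features_request(features, entities),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return flatten_response(json.load(resp))


# Example (needs the feature server started by run_server() to be running):
# rows = get_online_features(
#     "http://localhost:6566/get-online-features",
#     ["user_network_request_features:processName",
#      "user_network_request_features:processId",
#      "user_network_request_features:eventName"],
#     {"userId": [0]},
# )
```

Because the response stores one values list per feature, zip(*columns) transposes it into one record per entity, which is usually the shape a model's predict call expects.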

Real-Time Machine Learning with Rockset

Feature stores, including Feast, have become an essential part of the real-time machine learning data pipeline. With Rockset's new Feast integration, you can use Rockset as an online feature store and serve features for real-time personalization, anomaly detection, logistics tracking applications and more.

Rockset is currently available as an online store for Feast, and you can check out the code in the Feast GitHub repository. Get started with the integration and real-time machine learning with $300 in free Rockset credits. Happy hacking!

Rockset also supports vector search for real-time personalization, recommendations and anomaly detection. Learn more about how to use vector search on the Rockset blog.